Requests for comment/Workflows editable on-wiki

| Workflows editable on-wiki | |
|---|---|
| Component | General |
| Creation date | |
| Author(s) | Adam Wight |
| Document status | stalled |

This is a draft design of the workflow description system.

Stakeholders

- Editor community: Replace ad-hoc on-wiki processes with a well-defined workflow, rendering them transparent and consistent. We need to describe processes in a way that is readable and easy to change. Workflows can be customized on a per-site basis, or even per-user if desired.

- Extension authors: Tools like UploadWizard could be made customizable.

- Fundraising: We need a formal and verifiable way of enforcing rules about how we handle donations.

- Product designers: We need to have a consistent user experience across wikis, while accounting for necessary local differences in workflows.

Glossary

- Action

- Custom code called during job execution. All actions are defined in a library. Actions may accept arguments passed in the description. Actions can be associated with a transition, or be run upon entering a state.

- Configuration

- Constant inputs to the workflow that are edited on-wiki. These variables may be referenced in the description, or stored and loaded from action code.

- Description

- Workflow model and behaviors, expressed as nested attribute-value pairs. A description may be hosted and edited on-wiki, or stored on the filesystem. This data provides an outline of the workflow's actual states, transitions, and actions. Its contents are interpreted by the specific workflow implementation, so the exact details vary widely.

- Engine

- The MediaWiki extension which interprets and executes workflow specifications.

- Library

- PHP code defining a set of potential actions. Libraries are broken into small components which can be included by a workflow.

- Job

- Active process that moves through a workflow. Jobs can be suspended and resumed during multitasking, or according to schedule.

- Signal

- Event name sent to a job. The job will move along a transition of the same name. If there is no transition matching the signal, an error is raised.

- State

- A node in the workflow graph, in which a job rests between transitions. Also refers to the string value identifying which state a job is in.

- State variables

- Job-specific data accessed from action code. This must be serializable and have transactional behavior.

- Transition

- Named, unidirectional association (a.k.a. an arrow) pointing to the next state. The job follows this arrow when a signal of the same name is sent to it while it is at the transition's root state.

- Workflow

- An abused term usually referring to the business process we are modelling.
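
The relationship between jobs, states, signals, and transitions defined above can be sketched in a few lines. This is an illustrative Python model, not the engine's PHP API: the `Job` class, the `UnknownSignal` exception, and the dictionary layout of the description are all assumptions made for the example.

```python
class UnknownSignal(Exception):
    """Raised when the current state has no transition named after the signal."""


class Job:
    """Hypothetical job: an active process moving through a workflow."""

    def __init__(self, description, start):
        # description maps state name -> {transition name -> next state}
        self.description = description
        self.state = start

    def signal(self, name):
        """Follow the transition with the same name as the signal."""
        transitions = self.description[self.state]
        if name not in transitions:
            raise UnknownSignal(f"no transition {name!r} from state {self.state!r}")
        self.state = transitions[name]
        return self.state


description = {
    "Start": {"open": "Discussion"},
    "Discussion": {"extend": "Discussion", "keep": "Keep", "delete": "Delete"},
    "Keep": {},
    "Delete": {},
}

job = Job(description, "Start")
job.signal("open")    # job.state is now "Discussion"
job.signal("delete")  # job.state is now "Delete"
```

Sending `extend` to this job now would raise `UnknownSignal`, because `Delete` has no transition of that name.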

Alternatives

- Less intrusive tools to help with on-wiki processes. For example, parser functions to set a 7-day reminder alarm, or queue managers to produce pages that list work items.

- Borrow more from existing workflow systems such as YAWL.

Open issues

These questions must be answered because they will have an impact on design decisions.

Humanism

- This engineered approach might seriously damage wiki process discussions, by adding a layer of arcana that only tech wizards can manipulate. We want to estimate the social impact before deployment.

- The machine-executable state machine is rarely the simplest and most readable way to explain a process. It can be confusing even when graphed as a picture. Also, capturing process descriptions will introduce weird-looking artifacts like parallel subprocesses and extra states.

Resource management

- Resource management might not be appropriate to implement in a library; we will probably have to code it into the workflow core.

Data scope

An action can only access and store data in its job scope. We might want to communicate between inner and outer, or between parallel, workflows.

Client-side workflows

We might want a JavaScript implementation, if multi-step frontend interactions are conveniently expressed in our workflow description language.

Hooks

Hard to say. We could allow hooks in response to various workflow events, but if the callback does anything which interacts with the workflow (which would be very tempting), then we quickly lose the visibility that actions provide. Better would be to explicitly call wfRunHooks from the action code.

Architecture

Overview

Workflows are captured as text, on-wiki in the Workflow: namespace, or on the filesystem. These text contents describe process models as network graphs, like the familiar state machine, plus some associated configuration constants. When one of these networks is executed, it waits for signal words, and may respond by making calls into a tightly restricted set of custom PHP helper libraries. Complex workflows should be broken down into a cohort of sub-networks.

The goal of this process language is to provide a readable, minimalistic, and provably secure authoring tool, expressive enough to represent the majority of controller logic present in MediaWiki, while rendering it customizable in a standard way.

Use cases

[edit]Control flow

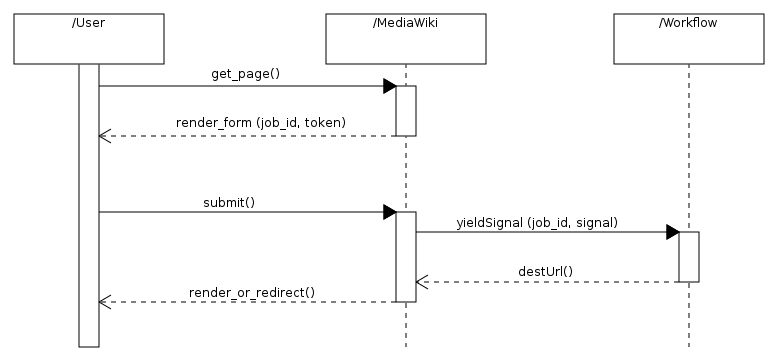

[edit]These sequence diagrams are examples of how the workflow system can be driven from MediaWiki extensions, and how user interactions take place.

Workflow state can be loaded directly and used like an ordinary variable. Here, a page is rendered from templated wikitext or a SpecialPage, and state variables are pulled in to generate the page content. The job is retrieved by ID, and perhaps the ID or a token (if the job does not belong to the current user) are embedded in the rendered content or stored in the session, to facilitate further user interaction.

When a workflow defines consecutive user interactions, we need a mechanism for the workflow engine to determine what page is rendered, or where to redirect the browser. Control flow is yielded to the workflow engine, and we are responsible for routing the next page.

MediaWiki can be used to schedule tasks which affect a workflow, for example a task which simply fires a signal at a given job_id. When the target workflow transitions, it may make calls back into MediaWiki.

Extensibility

The extension points are:

- Writing state machine descriptions, which may call actions

- Writing libraries that define new actions

Asynchronous vs. self-stimulating states

States are asynchronous by default, meaning that the job will be paused indefinitely upon entering the state. The job will remain in that state until it receives another signal. A self-stimulating state provides itself with a signal, leading to a transition.

Any user interaction step should guarantee that it will self-stimulate if granted control flow; see yieldSignal(signal). The engine will throw an exception if the machine enters an asynchronous wait while the user is waiting for a response. In theory we could allow such a wait, e.g. to call a service, but we can postpone a decision about this.
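
The difference between the two kinds of state can be sketched as a loop that keeps transitioning for as long as each newly entered state supplies its own signal. This is a Python illustration under stated assumptions, not the engine's implementation: `run_until_wait` and the `enter` callbacks are hypothetical, and they stand in for whatever mechanism (such as the `yieldSignal(signal)` mentioned above, whose exact signature is not specified here) a real state would use.

```python
def run_until_wait(states, state, signal):
    """Deliver `signal`, then keep transitioning while states self-stimulate.

    `states` maps state name -> {"transitions": {...}, "enter": callable}.
    An `enter` callable returns a signal name (self-stimulating state) or is
    absent (asynchronous state: the job pauses until an external signal).
    """
    path = [state]
    while signal is not None:
        state = states[state]["transitions"][signal]
        path.append(state)
        enter = states[state].get("enter")
        signal = enter() if enter else None
    return state, path


states = {
    "Start": {"transitions": {"submit": "Validate"}},
    # Self-stimulating: decides immediately and signals itself.
    "Validate": {"transitions": {"ok": "AwaitReview"}, "enter": lambda: "ok"},
    # Asynchronous: no entry signal, so the job waits here.
    "AwaitReview": {"transitions": {"approve": "Done"}},
    "Done": {"transitions": {}},
}

final_state, path = run_until_wait(states, "Start", "submit")
```

Here a single external `submit` carries the job through `Validate` without pausing, and the job comes to rest in `AwaitReview`.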

Actions

There are only two hook points for actions, so far: when beginning a transition, and upon entering a state. Just one would have been sufficient, but some workflows could only be expressed using baroque workarounds.

Where to hook an action is determined by whether you want it to run for only one specific transition into a state, or if it should run regardless of which transition fired. The relative timing is sort of an artifact, but you could make use of that as well.

If an action has side-effects beyond changing state variables, it must implement a transactional interface. If its side-effects cannot be reversed, something horrible and ambiguous happens.
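
The two hook points can be contrasted with a small sketch. It is written in Python for illustration only; the description layout, the `fire` function, and the action names are hypothetical and do not come from the engine.

```python
log = []


def record(label):
    """Build a hypothetical action that just records that it ran."""
    return lambda: log.append(label)


description = {
    "Draft": {
        "transitions": {
            # Transition action: runs only when "submit" fires from Draft.
            "submit": {"to": "Review", "actions": [record("notify_reviewers")]},
        },
    },
    "Rejected": {
        "transitions": {
            "resubmit": {"to": "Review", "actions": []},
        },
    },
    "Review": {
        # Enter-state action: runs whichever transition led here.
        "on_enter": [record("add_to_review_queue")],
        "transitions": {},
    },
}


def fire(state, signal):
    """Run transition actions first, then the target state's entry actions."""
    transition = description[state]["transitions"][signal]
    for action in transition["actions"]:
        action()
    target = transition["to"]
    for action in description[target].get("on_enter", []):
        action()
    return target


fire("Draft", "submit")
fire("Rejected", "resubmit")
```

After both calls, `notify_reviewers` has run once (only for the `submit` transition), while `add_to_review_queue` has run twice (once per arrival in `Review`).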

Concurrency

The base workflow is single-threaded for now: it is either not running (sitting in an asynchronous state) or performing a transactional transition. Transitions are synchronized using an exclusive mutex for each job instance.

Multithreading and joins will be defined in a library.
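
The per-job mutex can be illustrated with an in-process sketch. This is an assumption-laden Python example: a real deployment would need a lock that works across server processes (for instance at the database level), and the `lock_for` and `transition` helpers are hypothetical.

```python
import threading
from collections import defaultdict

# One lock per job ID. The registry itself is guarded so that two threads
# asking for the same job's lock always receive the same object.
_registry_guard = threading.Lock()
_job_locks = defaultdict(threading.Lock)


def lock_for(job_id):
    with _registry_guard:
        return _job_locks[job_id]


counters = {"job-1": 0}


def transition(job_id):
    # Exclusive per job: concurrent signals to one job are serialized,
    # while different jobs can still proceed independently.
    with lock_for(job_id):
        current = counters[job_id]
        counters[job_id] = current + 1


threads = [threading.Thread(target=transition, args=("job-1",)) for _ in range(50)]
for t in threads:
    t.start()
for t in threads:
    t.join()
```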

Data

There is only one scope for data: StateVariables, which are accessible through the job's (StateMachine) get/setValue(key...) methods. These state variables are backed by the database, and behave transactionally across transitions.

Configuration (statically defined in the workflow description) and state variables share the same namespace, so be aware that state variables will override any identically-named configuration.

Each machine instance is solely responsible for its variables. There are no assumptions about the naming or structure of the data, and nothing in the engine will modify or read from your data.
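
The shared namespace, in which a state variable shadows an identically named configuration value, behaves like a two-layer lookup. The following Python sketch models it with the standard library's `collections.ChainMap`; this is only an analogy for the described behavior, not how the engine stores data.

```python
from collections import ChainMap

configuration = {"normal_grace_period": "7 days", "maximum_discussion": "21 days"}
state_variables = {}

# Lookups check state variables first, then fall back to configuration.
# Writes through the ChainMap land in the first mapping (state variables).
values = ChainMap(state_variables, configuration)

before = values["normal_grace_period"]      # read from configuration
values["normal_grace_period"] = "10 days"   # stored as a state variable
after = values["normal_grace_period"]       # state variable now shadows it
```

The configuration itself is never modified, so a different job reading the same description still sees the original value.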

Access Control

Signaling

Workflows have rules regarding who can initiate and interact. Access control could potentially vary at state or signal granularity. Maybe libraries should be whitelisted for use in workflow descriptions: are there potentially dangerous libraries?

Tokens may be issued for special variances, redeemable via API or on the server. Tokens have an expiration time.
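
One possible shape for such tokens is a signed string naming the job, the permitted signal, and an expiry time. The sketch below is a Python illustration built on the standard `hmac` module; the token format, the `issue_token` and `redeem_token` helpers, and the hard-coded secret are all assumptions, since the token design is not specified here.

```python
import hashlib
import hmac
import time

SECRET = b"example-only-secret"  # assumption: a server-side secret key


def issue_token(job_id, signal, ttl_seconds, now=None):
    """Return a token allowing `signal` on `job_id` until it expires."""
    issued = now if now is not None else time.time()
    expires = int(issued + ttl_seconds)
    payload = f"{job_id}:{signal}:{expires}"
    mac = hmac.new(SECRET, payload.encode(), hashlib.sha256).hexdigest()
    return f"{payload}:{mac}"


def redeem_token(token, job_id, signal, now=None):
    """True only if the token is authentic, matches the request, and is unexpired."""
    try:
        tok_job, tok_signal, expires, mac = token.rsplit(":", 3)
    except ValueError:
        return False
    payload = f"{tok_job}:{tok_signal}:{expires}"
    expected = hmac.new(SECRET, payload.encode(), hashlib.sha256).hexdigest()
    if not hmac.compare_digest(mac, expected):
        return False
    if (tok_job, tok_signal) != (str(job_id), signal):
        return False
    current = now if now is not None else time.time()
    return current < int(expires)


token = issue_token(42, "approve", ttl_seconds=60, now=1000)
```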

Data

TBD: How do we specify access to the data?

Editing workflows

Configuration may be inlined with the spec, or stored as a separate file, so that we can regulate user edit access independently.

Workflow descriptions are written as wiki content, so they may transclude templates.

Access control

Access to edit publicly-executed workflows must be regulated for security. For example, a user could be sent to an arbitrary wiki page, which could lead to various attacks. But the primitive elements of a job are safe for any user to execute knowingly, so we can allow personalized workflows to flourish, as with User:.../global.{css,js}.

We could transclude templates in a job description, varying access level for each.

Exception handling

A specification may define global signals, which can be sent to a job in any state. This is a shortcut which expands to an implicit transition from every state in the workflow to a special exception state. An example would be a workflow in which the user can "cancel" at any step, which fires cleanup processing and transitions to the exit node.

If a job becomes uncompletable for any reason, it should be flagged as permanently frozen, and cleanup performed outside of the workflow system. There is no "universal" exception mechanism to catch unexpected errors.
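
The expansion of global signals into implicit transitions can be sketched as a preprocessing step over the description. This Python example is illustrative: `expand_global_signals` is a hypothetical helper, and the rule that an explicitly declared transition wins over a global one of the same name is an assumption, not something this design specifies.

```python
def expand_global_signals(states, exceptions):
    """Return a copy of `states` with every global signal added to each state.

    `states` maps state name -> {signal: target state}; `exceptions` maps a
    global signal name -> target state. Explicit transitions take precedence
    over global ones (an assumption made for this sketch).
    """
    expanded = {}
    for name, transitions in states.items():
        merged = dict(exceptions)
        merged.update(transitions)
        expanded[name] = merged
    return expanded


states = {
    "Start": {"open": "Discussion"},
    "Discussion": {"extend": "Discussion"},
    "Cancelled": {},
}
expanded = expand_global_signals(states, {"cancel": "Cancelled"})
```

After expansion, `cancel` is accepted in every state, while the original description is left unchanged.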

ACID

The easiest way to understand atomicity in workflows is to look at the steady state, which is when a job has arrived in a state and processing stops. Every transition between these steady states must be atomic: the machine cannot be paused mid-transition, and changes will be rolled back in case of error.

Each transition will be protected by a database transaction on the state variables, and any side-effects should be transactional as well. Library actions are responsible for guaranteeing that any side-effects are rolled back if the transition fails, even if it fails from another action. TODO: design the interface for actions with side-effects.
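
The all-or-nothing behavior of a transition can be sketched with an in-memory snapshot standing in for the database transaction. This Python example covers state variables only; rolling back external side-effects would need the interface that is still to be designed. `atomic_transition`, `TransitionFailed`, and the sample actions are hypothetical.

```python
import copy


class TransitionFailed(Exception):
    """Raised after a failed transition has been rolled back."""


def atomic_transition(job, target, actions):
    """Run `actions` and move `job` to `target`, or leave `job` untouched.

    `job` is a dict with "state" and "vars"; each action is a callable that
    mutates job["vars"] and may raise.
    """
    snapshot = copy.deepcopy(job)
    try:
        for action in actions:
            action(job["vars"])
        job["state"] = target
    except Exception as exc:
        # Restore the last steady state, even if an earlier action in the
        # same transition had already succeeded.
        job.clear()
        job.update(snapshot)
        raise TransitionFailed(str(exc)) from exc


def add_vote(variables):
    variables["votes"] = variables.get("votes", 0) + 1


def explode(variables):
    raise RuntimeError("mail server unavailable")


job = {"state": "Discussion", "vars": {"votes": 2}}
try:
    atomic_transition(job, "Closed", [add_vote, explode])
except TransitionFailed as failure:
    reason = str(failure)
```

Although `add_vote` succeeded before `explode` failed, the job is back in `Discussion` with its original vote count.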

Versioning

Modifications to a production workflow are tricky, because there may be jobs in the queue already. The base behavior is that the system caches each revision of a workflow description, and jobs are version-locked to the description used to initiate them. Job migrations are always explicit, even when they are a no-op.

If no version is available, for instance because the description came from the filesystem, then a SHA-256 hash of the description contents is used in its place.

Any workflow description that is used in production will be cached in the database indefinitely.
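
Choosing the version key for a description can be sketched as follows. The Python below uses the standard `hashlib` module; the `description_version` helper and the `rev:` and `sha256:` prefixes are assumptions made for illustration.

```python
import hashlib


def description_version(text, revision_id=None):
    """Return the key a job is locked to.

    Uses the wiki revision ID when there is one; otherwise falls back to the
    SHA-256 hex digest of the description contents.
    """
    if revision_id is not None:
        return f"rev:{revision_id}"
    return "sha256:" + hashlib.sha256(text.encode("utf-8")).hexdigest()


on_wiki = description_version("name: Example\n", revision_id=12345)
from_file = description_version("name: Example\n")
```

Because the hash depends only on the contents, two filesystem descriptions with identical text share a version key, and any edit produces a new one.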

Verifiability

It's very nice to be able to make "true" statements about how jobs in a workflow will behave. To maintain this property, no tool will be able to set a job to an arbitrary state.

There is one exception: job migrations must be able to map states between description revisions.
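
A migration of this kind can be sketched as an explicit state map between two description versions. This Python example is hypothetical throughout (`migrate`, `MigrationError`, and the job layout are assumptions); it only illustrates that a job's state changes through a declared mapping and never by arbitrary assignment.

```python
class MigrationError(Exception):
    """Raised when a job cannot be migrated with the given mapping."""


def migrate(job, from_version, to_version, state_map):
    """Return a copy of `job` moved to `to_version` via `state_map`.

    Every state must be listed, even when it maps to itself, so that a
    migration is always an explicit decision.
    """
    if job["version"] != from_version:
        raise MigrationError("job is not locked to the expected version")
    if job["state"] not in state_map:
        raise MigrationError(f"no mapping for state {job['state']!r}")
    return {**job, "version": to_version, "state": state_map[job["state"]]}


job = {"id": 7, "version": "rev:100", "state": "Discussion"}
migrated = migrate(job, "rev:100", "rev:101",
                   {"Start": "Start", "Discussion": "Debate"})
```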

Diagnostics

Jobs will be logged as they move through a workflow, including any signals received or actions performed.

EventLogging schemas

    // EventLogging Schema:WorkflowJobStarted
    {
        "description": "New workflow job started",
        "properties": {
            "jobId": {
                "type": "integer",
                "required": true,
                "description": "Workflow job ID"
            }
        }
    }

    // EventLogging Schema:WorkflowActionFired
    {
        "description": "Workflow job fired an action",
        "properties": {
            "jobId": {
                "type": "integer",
                "required": true,
                "description": "Workflow job ID"
            },
            "state": {
                "type": "string",
                "required": true,
                "description": "Machine state"
            },
            "actionAssociation": {
                "type": "string",
                "required": true,
                "description": "Enum {transition, enterState}"
            },
            "actionClass": {
                "type": "string",
                "required": true,
                "description": "Action classname"
            },
            "actionParameters": {
                "type": "string",
                "required": false,
                "description": "Arguments passed to the action (JSON-encoded)"
            }
        }
    }

    // EventLogging Schema:WorkflowSignalReceived
    {
        "description": "Workflow job has received a signal",
        "properties": {
            "jobId": {
                "type": "integer",
                "required": true,
                "description": "Workflow job ID"
            },
            "state": {
                "type": "string",
                "required": true,
                "description": "State before the transition"
            },
            "signal": {
                "type": "string",
                "required": true,
                "description": "Signal"
            },
            "stateVariables": {
                "type": "string",
                "required": false,
                "description": "State variables before the transition (JSON-encoded)"
            }
        }
    }

    // EventLogging Schema:WorkflowTransitionRollback
    {
        "description": "A workflow transition was rolled back",
        "properties": {
            "jobId": {
                "type": "integer",
                "required": true,
                "description": "Workflow job ID"
            },
            "state": {
                "type": "string",
                "required": true,
                "description": "State before the transition"
            },
            "transition": {
                "type": "string",
                "required": true,
                "description": "Transition"
            },
            "reason": {
                "type": "string",
                "required": true,
                "description": "Exception message"
            }
        }
    }

In debug mode, we might want to version state variables or dump them at each step.

Proposed implementation

Roadmap

Phase 0 (design)

- Agree on requirements

- Specification

Phase 1 (MVP)

- Server implementation: ACID, secure, API, persistence, logging, extensible, versioned

- Action libraries for: alarm, hierarchy, debug print, basic page flow (goto, URL params and session namespace)

- Basic definition editor, validation

- Goal: pilot deployment

Phase N

- Respond to feedback

- Client implementation

- Concurrency, decision and IPC libraries

- Performance optimization

Diagrams


Example workflow

Moving parts

- Default descriptions - your base workflows, which may be overridden downstream

- Default configuration - reasonable default values for all parameters

- Libraries - If the workflow involves anything novel, you may have to implement it as an action in PHP.

Example: Articles for Deletion


Workflow description

    name: Articles for Deletion Queue

    # TODO: flesh out
    # The AfD extension will hook on article save, and will check the article
    # content for new deletion tags. If this condition is present, we
    # instantiate a new AfD job with the new revision as its argument, and
    # begin the workflow.
    #
    # The workflow is split up into parallel and child workflows, a strategy
    # that should be used liberally, everywhere. We use the same implementation
    # for all specifications here out of laziness, but there are really three
    # archetypes: discussion queue, provisional endorsement, and admin review.
    #
    # The AfD workflow can receive the following signals:
    #   extend
    #   keep
    #   delete

    libraries:
      # Allows us to respond to user requests with a wiki page
      - WikiPages
      # Enables synchronous states by sending self a signal
      - SelfStimulating
      # Send self a signal in the future
      - Alarm
      # Tag pages and fork depending on existing tags
      - TaggedPage
      # Provides limit_jepoardy and delete_in
      - ArticlesForDeletion

    states:
      # Note that states and actions use both list and map syntax. Sorry. This
      # is because they must have a well-defined order.
      - Start:
          actions:
            # Append this article to the AfD discussion page, then signal "open"
            - add_to_afd_queue
            # This is a soft keep. If a child workflow later acts on "delete_in",
            # the expiration date and default outcome will be overridden.
            - keep_in: normal_grace_period
            # Takes a map from deletion tag name to workflow specification title.
            # A child workflow is begun, which can signal back to this machine.
            - fork_on_tag:
                PROD: Proposed Deletion
                BLP-PROD: Proposed Deletion, Biographies
                CSD: Speedy Deletion Queue
                Copyvio: Copyright investigation
          transitions:
            open: Discussion

      - Discussion:
          transitions:
            # There is logic in here to limit total time open to maximum_discussion.
            extend: Discussion

      # Proposed Deletion
      - PROD:
          actions:
            # Only allow PROD once per article. On successive invocations,
            # automatically send a "keep" signal and wait for admin review.
            - limit_jepoardy: 1
            - scan_tags
            # Sets an alarm to run
            - delete_in: normal_grace_period
          transitions:
            # signaled by the implementation when the template is removed from the article, or
            keep: Keep
            #
            delete: Delete

      - Keep:
          actions:
            - signal: review
          # No transitions, this is a final state

      - Delete:
          actions:
            - signal: review
          # No transitions, this is a final state

      - Review:
          transitions:
            endorse: End
            reverse: End

    # Shoot us out of the state machine if premature renomination for deletion is demonstrated.
    exceptions:
      early_renomination: Keep

    # Constants to be customized
    configuration:
      normal_grace_period: 7 days
      longer_grace_period: 10 days
      maximum_discussion: 21 days
      afd_queue_page: "Wikipedia:Articles for deletion"
      deletion_review_queue_page: "Wikipedia:Deletion review"

Further reading

- Research:Wikipedia Workflows

- meta:Workflows (a rough scratchpad of notes)

- Wikipedia:Deletion policy

- Wikipedia:AfD

- Petri net

- Requests for comment/Tickets