Developer Satisfaction Survey/2019

Background

During FY2019 annual planning, Developer Satisfaction was identified as a target outcome for the Wikimedia Technology department's Developer Productivity program.

In our first attempt at measuring progress towards this target, the Release Engineering Team conducted a survey in which we collected feedback from the Wikimedia developer community about their overall satisfaction with various systems and tools. We followed with a round of interviews focusing on the local development environment. This page summarizes that feedback as numbers and as the broad themes we were able to identify in it.

Since the privacy of respondents is important to us, we will not be publishing the raw responses. Instead, we will roughly paraphrase the most common complaints, suggestions and comments, along with some stats and other observations.

What we asked (Survey)

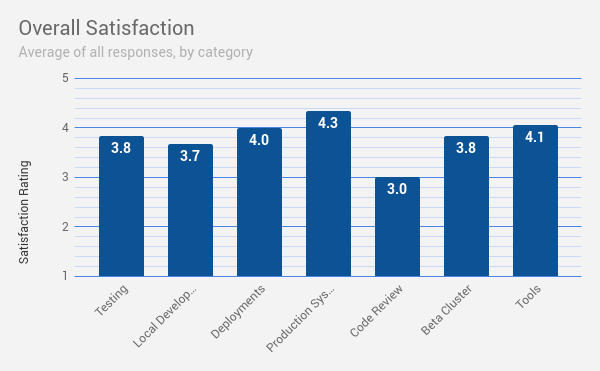

Respondents were asked to rate their satisfaction with several broad areas of our developer tooling and infrastructure. Each area was given a rating from 1 (very dissatisfied) to 5 (very satisfied). This was followed by several free-form questions that solicited feedback in the respondent's own words. The satisfaction ratings have been summarized by taking the average of all responses in each category.
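The summarization step described above amounts to a per-category arithmetic mean. A minimal sketch in Python, assuming made-up ratings: the raw responses are not published, so the numbers below and the helper name `average_scores` are purely illustrative.

```python
from statistics import mean

# Hypothetical ratings on the 1 (very dissatisfied) to 5 (very satisfied) scale.
# These are NOT survey data; they only illustrate the averaging step.
ratings = {
    "Code Review": [2, 3, 4, 4],
    "Local Development": [4, 3, 4, 4, 5],
}

def average_scores(ratings_by_category):
    """Return the mean rating per category, rounded to two decimals."""
    result = {}
    for category, values in ratings_by_category.items():
        for value in values:
            # Reject anything outside the 1-5 scale used by the survey.
            if not 1 <= value <= 5:
                raise ValueError(f"rating out of range in {category}: {value}")
        if values:  # skip categories nobody rated
            result[category] = round(mean(values), 2)
    return result

scores = average_scores(ratings)
for category, score in scores.items():
    print(f"{category}: {score}")
```

Categories with no ratings are skipped rather than reported as zero, since zero is not a valid rating on this scale.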

Who responded (Survey)

In total, we received 58 responses. Of those, 47 came from Wikimedia staff/Contractors, 10 from Volunteers, and just 1 from a 3rd Party Contributor. In the future, we should make an effort to reach more volunteers and 3rd party developers. For this first survey, keep in mind that the data are heavily biased towards the opinions of staff and contractors.
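To make the staff bias concrete, the share of each respondent group can be computed directly from the counts reported above. A small Python sketch (the variable names are illustrative):

```python
# Respondent counts as reported in this section.
respondents = {
    "Wikimedia staff/Contractors": 47,
    "Volunteers": 10,
    "3rd Party Contributors": 1,
}

total = sum(respondents.values())

# Percentage of all responses contributed by each group, to one decimal place.
shares = {
    group: round(100 * count / total, 1)
    for group, count in respondents.items()
}

for group, share in shares.items():
    print(f"{group}: {share}%")
```

With these counts, staff and contractors account for roughly 81% of all responses.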

What we asked (Interview)

Respondents were asked some general questions about their local environment setup, then moved on to a conversation and show-and-tell about their local environment. Interviewers recorded notes.

Who responded (Interview)

In total, there were 19 interviews. Of those, 16 were with Wikimedia staff/Contractors, 2 were with Volunteers, and just 1 was with a 3rd party.

There were 9 Mac users, 9 Linux users, 2 Windows users, and 1 other user (the total exceeds 19 because some interviewees use multiple systems).

Aggregate Scores (Survey)

Analysis (Survey)

Most of the responses could be summarized as simply "satisfied", with most of the categories averaging near 4. Below we discuss notable exceptions to this generalization and any other observations that can be gleaned from the available data.

One thing stands out immediately when looking at the average scores: Code Review has the lowest score by quite a margin. At 3.0, it is well below the next lowest score, Local Development at 3.7. Because it was not possible to respond with zero in any category, the lowest possible score is effectively 1. The number is even worse if we look only at the responses from Volunteers: that subgroup gave Code Review a very disappointing average rating of 2.75, while Wikimedia staff and contractors averaged 3.42.

Qualitative Analysis of Respondent Comments (Survey)

We attempted to divide the content of respondent comments into several categories to identify common themes and areas for improvement. We created diagrams with the comments in each theme in order to come up with "How can we..." questions that will help kick off future investigation and brainstorming for improvements in each category.
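The per-problem counts listed under each category below can be thought of as a tally of coded comments. A rough Python sketch of such a tally, assuming each comment has already been paraphrased and assigned a category by hand; the sample data and the helper name `tally_by_category` are hypothetical and do not reproduce the actual responses or counts.

```python
from collections import Counter, defaultdict

# Hypothetical coded comments: (category, paraphrased problem).
# The labels echo the style of this page, but the counts are illustrative only.
coded_comments = [
    ("Developing", "CI takes too long"),
    ("Getting Reviews", "Have to wait too long for reviews"),
    ("Developing", "CI takes too long"),
    ("Developing", "I don't like using Gerrit"),
    ("Getting Reviews", "Have to wait too long for reviews"),
    ("Developing", "CI takes too long"),
]

def tally_by_category(comments):
    """Count how often each problem was mentioned within each category."""
    counts = defaultdict(Counter)
    for category, problem in comments:
        counts[category][problem] += 1
    # most_common() orders problems from most to least frequently mentioned.
    return {category: counter.most_common() for category, counter in counts.items()}

tallies = tally_by_category(coded_comments)
for category, problems in tallies.items():
    print(f"- {category}:")
    for problem, count in problems:
        print(f"  - {problem} - {count}")
```

Sorting by frequency puts the most commonly reported problems at the top of each category, which is the order used in the lists on this page.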

Categories

- Automated Testing:

- It’s hard/intimidating to run tests on MediaWiki locally - 10

- Not enough docs for running tests locally / unclear how to run tests - 5

- Can’t run tests separately (locally) - 4

- Tests don’t pass on MediaWiki locally (possible configuration issues) - 4

- Running tests locally takes too long - 4

- Testing and risk mitigation aren’t stopping bad code from being merged - 3

- Local testing results don’t match CI results - 3

- Code coverage for PHP unit tests on MediaWiki is too low - 2

- Having many different types of tests is confusing - 2

- Timeouts happen when running lint and CodeSniffer on MediaWiki core - 1

- Environment is destroyed after failed tests - 1

- Can’t run all the tests locally - 1

- Can’t see code coverage tests in IDE - 1

- Adding code coverage tests for extensions is too complicated - 1

- Build failures aren’t caught before merge - 1

- Tests aren’t reliable (randomly fail?) - 1

- Tests run pre-merge aren’t the same as post-merge causing problems - 1

- Untrusted users can’t run tests - 1

- Can’t test components in isolation - 1

- Collaborating:

- I don’t know how to get visibility for my bug - 1

- I don’t know how to configure log levels on MediaWiki - 1

- I don’t like Phabricator - 1

- I have a hard time keeping track of sprints in Phabricator - 1

- I can’t unlink a Wikidata property ID in Phabricator - 1

- Lots of duplicate tasks are filed in Phabricator - 1

- Phabricator UI changes without warning - 1

- Phabricator is bad for everything but task management - 1

- It is hard to get responses on Phabricator tasks - 1

- I have trouble collaborating with Wikimedia staff members - 1

- Many pages aren’t being watched - 1

- I feel unwelcome and without a community - 1

- Debugging:

- It’s hard to debug production - 1

- Deploying:

- Deployment takes too long and is complicated/very manual - 14

- The deployment schedule isn’t frequent enough - 6

- It is hard to deploy a new service to production - 6

- I can’t deploy on my own / whenever I am ready - 3

- Deployment schedule is too variable - 2

- Canary releases don’t use enough metrics / not configurable - 2

- No good coverage for performance regressions - 2

- The deployment schedule is inconvenient for me - 1

- Not enough people have access to deployment tooling - 1

- Too many tests use live traffic - 1

- No health checks - 1

- SWAT deployment delays - 1

- Deployment process to staging environment unclear - 1

- I don’t know when deploys are blocked - 1

- Deployers don’t have knowledge to debug problems - 1

- Canary release process is not clear - 1

- Developing:

- CI takes too long - 24

- It is hard/inconvenient to configure CI jobs for repos - 18

- I don’t like using Gerrit - 17

- Documentation isn’t tied to the actual code - 3

- Gerrit workflow is hard to understand - 3

- It’s hard to write documentation for multiple versions - 2

- CI runs tests unnecessarily for users with more rights - 1

- Don’t like Jenkins Job Builder - 1

- Gearman queue crashes - 1

- It is hard to share code with others - 1

- It is hard to get a Gerrit account - 1

- I can’t use Gerrit (well?) on mobile - 1

- The new UI for Gerrit is hard to use - 1

- Local dev environment is slow and hard to use - 1

- I don’t know how to interact with Gerrit - 1

- Documentation doesn’t clearly explain use-cases - 1

- I don’t know how to develop on MediaWiki - 1

- Finding Things:

- It is hard to find docs / they don’t exist - 15

- It’s hard finding the right Diffusion repo - 2

- I can’t search all repos with code search - 1

- I can’t search tools with code search - 1

- I don’t feel the code search feature is useful - 1

- Deployment error logs are hard to discover - 1

- Gaining Knowledge:

The Gaining Knowledge analysis produced the following questions:

How can we...

- help developers discover code maintainers/reviewers?

- enable developers to configure alerts?

- ensure the right person sees alerts?

- make logs easily discoverable to developers?

- help developers use logs for analysis?

- help people discover and access documentation?

- ensure documentation is always relevant?

- ensure documentation is complete?

- share information about the production environment?

- present information from reports in a useful way?

- develop and share the deployment process?

- Getting Reviews:

- Have to wait too long for reviews - 16

- Have to wait too long for merges - 15

- Review/merge culture/process is not well established - 13

- Teams aren’t prioritizing or don’t have time for reviewing/merging - 8

- Security review for new services takes too long - 2

- Teams don’t see open patches requiring review/merge - 1

- UI Testing:

The UI Testing analysis produced three questions:

How can we...

- do better catching bugs before they hit production?

- enable quick and easy production-like testing environments for developers?

- share information about the staging environment?

Qualitative analysis of interview notes

Responses in the form of notes were coded into problems, which were then grouped into categories in an attempt to reveal common issues. Color-coded mind maps were created to aid analysis:

- Local Dev Environment Responses on Configuration Mindmap

- Local Dev Environment Responses on Contributors Mindmap

- Local Dev Environment Responses on Sharing Mindmap

- Local Dev Environment Responses on Standards Mindmap

- Local Dev Environment Responses on Documentation Mindmap

- Local Dev Environment Responses on Debugging Mindmap

- Local Dev Environment Responses on Setup Mindmap

- Local Dev Environment Responses on Maintenance Mindmap

- Local Dev Environment Responses on Internet Connections Mindmap

- Local Dev Environment Responses on Performance Mindmap

- Local Dev Environment Responses on Non-categorized things Mindmap

- Local Dev Environment Responses on Ease of Use Mindmap

- Local Dev Environment Responses on Running Tests Mindmap

- Local Dev Environment Responses on Continuous Integration Mindmap

- Local Dev Environment Responses on Databases Mindmap

Analysis produced the following "How can we..." questions:

- make documentation easier to read?

- make documentation easier to access?

- define clear standards for users?

- make the local development environment easier to set up?

- make the local development environment easy to configure and use?

- make easily shared environments?

- help non-devs have an environment?

- decrease resource usage?

- speed up setup, load, and run times?

- avoid discouraging contributors?

- achieve more parity with production?