Article feedback/Call to action

This page presents a high-level summary of the latest findings on the effects of calls to action (CTAs) and discusses recommendations for future design.

Current CTAs

As of AFT v.4, three different CTAs have been implemented and tested: Edit, Join and Survey.

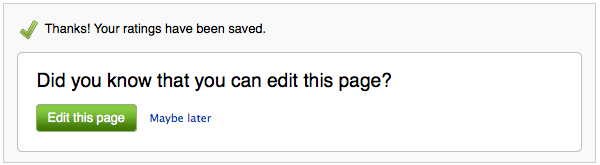

Edit this page

The Edit CTA invites users to edit an article after rating it. The CTA is non-conditional, i.e. it doesn't depend on the actual rating values submitted by the user. The analysis of this CTA across different AFT versions suggests that on average 1 out of 6 users who see this CTA accept the invitation (click-through rate: 15.1% (v.3) - 17.4% (v.4)). This ranks the Edit CTA second, after the Survey CTA, in terms of click-through rate. As for completion, 15.3% of users who accepted the invitation successfully completed an edit, which corresponds to a net conversion rate of 2.7% of all users who saw the CTA (v.4 data). These rates show that a relatively high number of users are potentially interested in contributing, but only a small fraction (although still sizable in absolute numbers) actually do so.
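The net conversion rate quoted above is simply the click-through rate multiplied by the completion rate. A minimal sketch of that arithmetic follows; the function name `net_conversion` is ours, for illustration only, and is not part of the AFT codebase.

```python
def net_conversion(click_through_rate: float, completion_rate: float) -> float:
    """Fraction of all users who saw the CTA and went on to complete the task.

    Both arguments are fractions between 0 and 1.
    """
    return click_through_rate * completion_rate


# v.4 figures for the Edit CTA, as reported on this page
edit_net = net_conversion(0.174, 0.153)
print(f"Edit CTA net conversion: {edit_net:.1%}")
```

The same calculation applied to the v.4 Survey CTA figures reported further down this page (37.2% click-through, 64.8% completion) gives the 24.1% net conversion rate quoted there.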

Discussion. It's highly unlikely that rating distracts people from editing the article, for three reasons: (1) the AFT is currently positioned at the bottom of the page, (2) it isn't actually loaded until users scroll to the bottom of the page, and (3) we know that rating conversions (i.e. the number of people rating an article after reading it) decay very rapidly with page length. People interested in editing would already have done so using the main edit tab or the section edit links. We currently do not have data to test this hypothesis, but if it holds we can reasonably assume that edits produced via the Edit CTA, however small in volume, are additional to regular edits. A second issue, alongside edit volume, is the quality of the edits generated by the CTA. We currently do not have data to measure how these edits compare to regular edits (in particular, whether they have a higher or lower chance of being reverted).

Suggestions

- Track edits generated via this CTA and users who make their first edit via this CTA

- Follow up with users after their first edit

- Target the Edit CTA to problematic sections of an article by making it conditional and granular (see below)

Create an account

The Join CTA invites people to log in or to sign up after rating an article. The CTA is non-conditional, i.e. it doesn't depend on the actual rating values submitted by the user. The click-through rate for the Join CTA is the lowest among the CTAs we implemented, with 4.7% of users on average choosing to sign up and 3.5% of users choosing to log in (v.4 data). Completion rates are not available for this CTA. The click-through rates by themselves show that only a small number of people are interested in creating an account as a result of rating an article.

Discussion. The low click-through rates suggest that people do not feel a compelling reason to sign up after rating an article. This is likely because this CTA is decontextualized with respect to the action of rating an article. It's also plausible that some of the clicks on the login button are target errors by users who meant to sign up, so the actual CTR for signup would be between 4.7% (if no login clicks were errors) and 8.2% (if all of them were). We should expect a higher click-through rate if we give raters a specific reason to create an account after rating.

Suggestions

- Provide context as to why users should create an account

- Reduce potential target errors by removing the login button

Take a survey

The Survey CTA invites people to take a short survey after rating an article. The survey relates both to the article and to the feedback tool. The CTA is non-conditional, i.e. it doesn't depend on the actual rating values submitted by the user. The click-through rate for the Survey CTA is the highest among the CTAs we implemented, with more than 1 out of 3 users (37.2% (v.4) - 40.6% (v.3)) on average agreeing to take the survey. Completion rates are also the highest we obtained among all CTAs, with 64.8% (v.4) to 66.3% (v.3) of all users who clicked on the CTA successfully completing and submitting the survey: this corresponds to a net conversion rate of 24.1% of all users who saw the CTA (v.4 data).

Discussion. The high completion rate for this CTA indicates that users who rate an article are on average happy to provide further feedback. This raises two questions: in terms of quality, how do we make sure that the feedback provided is actionable, and in terms of engagement, how can we further retain users who rated an article? As for quality, obtaining detailed feedback in the form of a post-rating survey is very straightforward, and we could increase the completion rate even further by skipping the intermediate step of inviting users to take the survey and simply displaying it upon submission of the ratings. A lot of valuable feedback is currently available in the survey's free-text comments, but it's hard to tell constructive feedback apart from spam or useless comments. If we wish to surface good comments only, we should expose per-page feedback via a queue where registered users can decide to promote the good comments to a talk page. This, however, doesn't address the problem of how to further engage raters, who won't be aware of a thread triggered on a talk page by their comments (unless we can follow up with them via email). As far as direct user engagement is concerned, the high completion rate suggests that this is the most promising entry vector among the CTAs we experimented with. We should use conditional CTAs to maximize the usefulness of the feedback we collect via the post-rating survey and to further engage the user (see below).

Suggestions

- Remove intermediate invitation

- Allow registered editors to review free-text comments

- Make feedback actionable (tying it to article sections/conditional on ratings)

- Notify users when feedback is promoted to talk pages

Proposed CTAs

Rate another page

One of the problems we are facing with the current AFT design is the very low number of users who rate multiple articles (roughly 2% of anonymous raters). Being able to track multiple ratings by the same user would offer several benefits:

- measure individual rater bias against a control group of users who rated the same articles

- use these rater metrics to weigh or filter individual ratings when generating aggregate quality scores

- actively engage with the user as a potential editor: by selecting similar articles to read and rate, we could increase the volume of ratings and also keep the user engaged for a longer timespan. If we then displayed a page listing all non-expired ratings submitted by an individual user, based on topics they like, we could use it as an important entry point for account creation or WikiProject recruitment (by analogy, think of the recommendation engine Netflix uses to maximize the information it collects about a user's taste and interests, while increasing the volume of ratings across its database). Note that there has been a request to have an "articles I rated" page.
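To make the first benefit concrete, here is a rough sketch of one way rater bias could be estimated once multiple ratings per user are tracked. It is an illustration under simplifying assumptions, not a description of the AFT data model: ratings are reduced to a single score per article, the `(user, article, score)` layout and the function name `rater_bias` are hypothetical, and the "control group" is taken to be every other user who rated the same article.

```python
from collections import defaultdict
from statistics import mean


def rater_bias(ratings, rater):
    """Mean signed difference between `rater`'s scores and the average score
    given by other users (the control group) to the same articles.

    `ratings` is an iterable of (user, article, score) tuples (hypothetical
    layout). Returns None if no article was rated by both the rater and at
    least one other user.
    """
    by_article = defaultdict(dict)
    for user, article, score in ratings:
        by_article[article][user] = score

    diffs = []
    for scores in by_article.values():
        if rater not in scores:
            continue
        others = [s for u, s in scores.items() if u != rater]
        if others:  # need at least one control rating to compare against
            diffs.append(scores[rater] - mean(others))
    return mean(diffs) if diffs else None


# Made-up sample data, for illustration only
sample = [
    ("alice", "A", 5), ("bob", "A", 3), ("carol", "A", 3),
    ("alice", "B", 4), ("bob", "B", 2),
]
print(rater_bias(sample, "alice"))
```

A positive value means the rater tends to score higher than the control group, a negative value lower. Such a metric could feed the weighting or filtering step mentioned in the second benefit, although how to do that well is an open design question.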

Discuss this page

Several users suggested we should introduce a CTA to directly invite raters to send their feedback via article talk pages. This is problematic for two reasons: (1) the risk of driving a lot of spam to the talk page while lacking a system to filter these comments (as described above), and (2) the risk of discouraging anonymous users from commenting if we expose them to a public space like a talk page. An intermediate solution that raises the barrier for potential spammers and improves the accuracy of feedback is to ask users to select which section of the article their comments refer to (by pulling a list of section headings from the article in the form of a dropdown menu) and to further qualify their feedback (is this a suggestion to expand a section? To add more sources? To flag a section of the article as biased?) before they can submit it. This would make feedback actionable and granular. Feedback granularity is another request that was raised on the AFT discussion page.
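The section dropdown and feedback qualification described above could be sketched as follows. This is only an illustration: it assumes the article's raw wikitext is available as a string, relies on the standard wikitext heading syntax (`== Title ==`, with two to six equals signs on each side), and the function names and feedback type labels are hypothetical.

```python
import re

# Wikitext headings look like "== Title ==", with 2 to 6 "=" on each side.
HEADING_RE = re.compile(r"^(={2,6})\s*(.+?)\s*\1\s*$", re.MULTILINE)

# Hypothetical feedback categories, based on the examples given in the text.
FEEDBACK_TYPES = {"expand_section", "add_sources", "flag_bias"}


def section_headings(wikitext: str) -> list[str]:
    """Return the section titles in document order, for use as dropdown options."""
    return [m.group(2) for m in HEADING_RE.finditer(wikitext)]


def validate_feedback(wikitext: str, section: str, kind: str, comment: str) -> bool:
    """Accept feedback only if it names an existing section, a known
    feedback type, and a non-empty comment."""
    return (
        section in section_headings(wikitext)
        and kind in FEEDBACK_TYPES
        and bool(comment.strip())
    )


article = (
    "Intro text\n"
    "== History ==\nText\n"
    "=== Early years ===\nMore\n"
    "== References ==\n"
)
print(section_headings(article))
print(validate_feedback(article, "History", "add_sources", "Needs citations"))
```

Requiring a valid section and feedback type before submission is what raises the barrier for spam; it does not replace the review queue discussed above.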

Conditional CTAs

We haven't experimented with conditional CTAs, i.e. CTAs that are triggered as a function of the ratings submitted by the user. Conditional CTAs are likely to be more effective than non-conditional CTAs at getting users to complete a task and at increasing the accuracy of the feedback we collect.

These are examples of conditional CTAs triggered by low scores:

- a low score on "Trustworthy" not paired with a low score on other dimensions should generate a CTA asking the rater to suggest sources to improve the article.

- a low score on "Well-written" should trigger a CTA inviting the rater to edit the article to improve its readability (possibly by using section editing as suggested above).

- a low score on "Complete" should trigger a CTA inviting the rater to suggest a new section or a subsection to an existing section.

- a low score on "Objective" should invite the rater to identify the specific section perceived as biased and to submit links or sources to support this claim.

Conversely, a high score across the board could be used to engage the rater in social interaction, e.g. trigger a CTA asking the rater to praise the contributors of the article. This could be done by sending WikiLove to the top 5 contributors of the article or, more strategically, to the most recently registered users who contributed to that article.
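The rules above can be read as a simple mapping from submitted ratings to CTAs. The sketch below is one possible reading, not a specification: the 1-5 scale, the thresholds (`LOW`, `HIGH`), the dimension keys and the CTA labels are all assumptions made for illustration.

```python
LOW = 2   # assumed: scores at or below this count as "low" on a 1-5 scale
HIGH = 4  # assumed: scores at or above this count as "high"


def choose_ctas(ratings: dict[str, int]) -> list[str]:
    """Map the ratings submitted for one article to a list of CTA labels.

    `ratings` maps a dimension key ("trustworthy", "objective", "complete",
    "well_written") to a score. All labels here are hypothetical.
    """
    low = {dim for dim, score in ratings.items() if score <= LOW}

    if not low:
        # High scores across the board: invite the rater to praise contributors.
        if ratings and all(score >= HIGH for score in ratings.values()):
            return ["praise_contributors"]
        return []

    ctas = []
    # Low "Trustworthy" only counts when no other dimension is also low.
    if low == {"trustworthy"}:
        ctas.append("suggest_sources")
    if "well_written" in low:
        ctas.append("edit_article")
    if "complete" in low:
        ctas.append("suggest_section")
    if "objective" in low:
        ctas.append("flag_biased_section")
    return ctas


print(choose_ctas({"trustworthy": 1, "objective": 4, "complete": 4, "well_written": 5}))
print(choose_ctas({"trustworthy": 5, "objective": 5, "complete": 4, "well_written": 4}))
```

When several dimensions are low, this sketch returns several candidate CTAs; deciding which one to show, or whether to fall back to a non-conditional CTA when nothing matches, is left open.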

Summary

CTAs are effective vectors for directing readers to specific tasks that can help improve the quality of articles or increase their active engagement with our content and community. To improve the effectiveness of CTAs for quality assessment and user engagement we need to:

- Identify strategies to engage with users after a CTA is completed

- Make feedback generated via post-rating CTAs as granular as possible

- Assess the risks of spammy behavior when inviting users to directly edit articles or talk pages