VisualEditor on mobile report

This page is a report on mobile editing using the visual editor that is being produced as part of the Editing team's work from the 2018-2019 annual plan. This report is the foundation of this year's work, described at the VisualEditor on mobile project page.

Strategic direction

The Wikimedia Foundation 2030 strategic direction calls on the Foundation to invest in knowledge equity, which “means focusing on the knowledge and communities that have been left out by structures of power and privilege, and welcoming people from every background to build strong and diverse communities.”

The Annual Plan for FY2018/2019 lays out how the Foundation aims to achieve these goals by focusing on the diversity of contributors and content as well as the need to ensure that mobile devices are fully supported in order to empower people in underrepresented regions and languages.

The Audiences department is focused on improving the mobile editing experience. Within the department, the Editing team is working to improve the experience of visual-based mobile editing by:

- Conducting research to understand the current visual-based mobile editing experience and define areas for improvement (Q1)

- Working with the community to determine a strategic approach to building tools (Q2-Q4)

- Developing and testing solutions to ensure pain points have been lessened or removed (Q1-Q4)

Objective of this report

The objective of this report is to document the current state of visual-based mobile web editing, and to identify any challenges that exist. This report will:

- explore and document the end-to-end workflow for current users

- define pain points in the workflow and larger scale problems outside the workflow

- make proposals for addressing identified pain points

- validate thinking and stimulate discussion with both senior management and the communities

This report is intended to define the current visual-based mobile editing experience and identify areas of challenge. The report will be updated based on feedback, but ultimately it is intended to be static documentation of the current understanding of mobile editing (as of Q1 2018), not a moving target that tries to document everything. The Audiences team will build upon this work, and ultimately all action items will go into Phabricator tasks.

Definitions

- mobile editing – Mobile Editing refers to any kind of contribution made to Wikimedia through a phone or tablet. Mobile content creators and editors access Wikimedia through native or web apps. The number of people who are using mobile devices to access Wikimedia projects is constantly increasing. Many users access Wikimedia sites exclusively this way. In particular, emerging Wikimedia communities have many more primary and exclusive mobile users compared to existing communities.

- visual editor – the current technical implementation in the browser. Editing using wikitext is a barrier for many mobile contributors, due to the unfamiliar markup system and the difficulties created by the use of mobile devices in general. For example, smaller screens allow contributors to see and preview only a fraction of a page at a time, and the absence of common markup characters, such as ' and [, from the devices' main keyboard views raises the bar for the technical know-how required to contribute easily. The visual editor within the mobile web experience solves many of the pain points that contributors have with wikitext on mobile; however, it has usability, user experience, and performance issues.

- visual-based mobile editing – An experience that allows an editor to see what the end result will look like while the edit is being created. This is the general term for the approach to creating this kind of edit.

- mobile web – The use of the internet through a handheld mobile device such as a phone or tablet.

- mobile site – A more mobile-friendly way of reading or editing Wikipedia or another project on the mobile web. It uses MobileFrontend in the user's mobile web browser, instead of the mobile apps. This page can be seen on the mobile site at https://m.mediawiki.org/wiki/Mobile_editing_using_the_visual_editor_report . The mobile site is used on both mobile devices and desktop computers.

- desktop site – Wikipedia or related sites in a form optimized for desktop users. This page can be seen on the desktop site at https://www.mediawiki.org/wiki/Mobile_editing_using_the_visual_editor_report . The desktop site is used on both mobile devices and desktop computers.

History of visual editing on the mobile web

Visual editing on Wikimedia has evolved significantly over the years. Some highlights include:

- Summer 2012: Wikitext editing on mobile Web was developed initially as a beta, and then was released for all logged-in users in July 2013.[1]

- Autumn 2013: Visual editing on mobile Web was developed alongside the desktop release. It was initially released to tablet-based logged-in beta users in October 2013, with a "switcher" to let users move back and forth between editors; pressing the edit pencil would always initially open the wikitext editor.

- 2014: Visual editing was rolled out more widely, and remained available on an opt-in basis to all users on all devices up to September 2018.

- November 2014: Mobile Web wikitext and visual editing were expanded to logged-out editors, initially on the Italian Wikipedia.[2]

- April 2015: Logged-out visual editing on mobile Web was expanded to all wikis.[3]

Quantitative metrics

See T202132.

One way that product teams gather an understanding of how their products are being used is to use quantitative metrics.

Below are some metrics that the Editing team is interested in, as well as a brief explanation of each one.

EventLogging metrics

The metrics in this section were calculated from the Edit event stream, which uses EventLogging to track various events that happen as users move through the process of making an edit. This means that these metrics share certain caveats:

- This event stream has a sampling rate of 6.25%, meaning that roughly speaking only one of every sixteen edit sessions sends data for analysis.

- EventLogging does not send events if the user has enabled Do Not Track in their browser.

- These metrics are based on the events recorded during May 2018, so they may not reflect the long-term average.

- A session's platform (mobile or desktop) was identified based on whether the user was using the desktop website or MobileFrontend. Since a user can choose to switch to the desktop website on a mobile device or vice versa, the desktop platform includes some edits made on mobile phones or vice versa.

- This data is observational rather than experimental, so metric differences between the editing interfaces may be due to the type of users who choose that interface rather than the interfaces themselves.

- Since users must opt in to using the mobile visual editor, its metrics are based on a small number of sessions (see table below) from users who are likely better informed than average.

| editor | desktop | mobile |

|---|---|---|

| visual editor | 331,421 | 20,643 |

| wikitext editor | 3,367,759 | 1,912,178 |

Usage of common editing features

Explanation: understanding which common editing features people use (or do not use) may affect our direction in terms of possibly building stripped-down experiences with fewer features.

This data is now being captured in Schema:VisualEditorFeatureUse. This data includes only sessions with a saveIntent event, so that sessions where the user doesn't try to do anything at all don't affect the percentages.

| feature | desktop | mobile |

|---|---|---|

| any basic feature | 40% | 28% |

| add or modify images | 4% | 1% |

| add or modify citations | 17% | 7% |

| add or modify links | 26% | 20% |

| add or remove text formatting (bold, italics, superscript...) | 9% | 8% |

| any feature (basic features plus templates, page settings, find and replace, etc.) | 55% | 45% |

Edit completion rate

See T202134.

An edit session starts when a user clicks the edit button, but most sessions don't result in a saved edit. The edit completion rate gives the proportion of sessions which do result in a save, out of all those where the editor has a chance to fully load. This metric can help us figure out which platforms are more difficult for users to use.

| editor | desktop | mobile |

|---|---|---|

| visual editor | 12.6% | 15.8% |

| wikitext editor | 24.6% | 2.6% |

| overall | 22.5% | 2.7% |

Desktop

On desktop, the visual editor has a lower overall completion rate, but this is due to the fact that most* first-time IP editors get VE by default, and that group has a higher rate of abandoning the edit session. Only 5.2% of IP editors who use VE complete their edit. (It's likely that many of them clicked the edit button by mistake, or out of curiosity to see what happens.) More savvy IP editors who switch to wikitext have a higher completion rate, 6.4%.

For logged-in editors of all experience levels on desktop, VisualEditor has a higher completion rate.

*nl.wiki, es.wiki and en.wiki for example do not default to VisualEditor for first-time IP contributors editing on desktop. Instead, they are shown the wikitext editor. See VisualEditor/Rollouts for more details.

| edits | visual editor | wikitext |

|---|---|---|

| Overall | 12.6% | 24.6% |

| IP editor | 5.2% | 6.4% |

| 0 edits (new editor) | 27.8% | 18.0% |

| 1-9 edits | 45.3% | 35.5% |

| 10-99 edits | 57.6% | 48.8% |

| 100-999 edits | 65.3% | 58.4% |

| 1000+ edits | 73.3% | 71.7% |

Mobile

On mobile, the situation is reversed. First-time IP editors get wikitext by default, and there's a very high abandonment rate -- only 1.5% of mobile IP editors complete their edit with wikitext. The IP editors who switch to VE have a higher completion rate, 11.4%.

For logged-in editors of all experience levels on mobile, the wikitext editor has a higher completion rate.

| edits | visual editor | wikitext editor |

|---|---|---|

| Overall | 15.8% | 2.6% |

| IP editor | 11.4% | 1.5% |

| 0 edits (new editor) | 17.4% | 21.9% |

| 1-9 edits | 28.0% | 38.7% |

| 10-99 edits | 38.1% | 45.8% |

| 100-999 edits | 52.1% | 55.9% |

| 1000+ edits | 58.1% | 62.9% |

Drop-off along the edit funnel

See T202147.

To better understand the majority of edit sessions where the user does not save an edit, we can look at where in the process users tend to drop off. This can help us target our improvements on those steps.

Caveat: this overall chart does not include loaded or saveIntent since not all editors log those events.

| event | % of sessions reaching |

|---|---|

| init | 100.0% |

| ready | 63.9% |

| saveAttempt | 7.9% |

| saveSuccess | 7.7% |

About 36% of sessions (100% minus the 63.9% that reach ready) drop off before the editing interface is ready to be used; these may be cases where users clicked on the button accidentally or where the interface took too long to load. These sessions were excluded from calculating the edit completion rate above.
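As a worked illustration of reading the overall funnel, the sketch below computes the share of sessions lost between each pair of consecutive events, relative to the sessions that reached the earlier event. The percentages are copied from the table above; the function name `step_dropoff` is a hypothetical helper, not part of the team's analysis code.

```python
# Percentage of sessions reaching each event, copied from the overall table above.
funnel = [
    ("init", 100.0),
    ("ready", 63.9),
    ("saveAttempt", 7.9),
    ("saveSuccess", 7.7),
]


def step_dropoff(stages):
    """Return (from_event, to_event, percent lost) for each consecutive pair.

    The percent lost is relative to the sessions that reached from_event.
    """
    steps = []
    for (prev_name, prev_pct), (name, pct) in zip(stages, stages[1:]):
        lost = 100.0 * (prev_pct - pct) / prev_pct
        steps.append((prev_name, name, round(lost, 1)))
    return steps


dropoff = step_dropoff(funnel)
for prev_name, name, lost in dropoff:
    print(f"{prev_name} -> {name}: {lost}% of sessions lost")
```

Read this way, the largest relative loss is between ready and saveAttempt, while very few sessions that attempt a save fail to complete it.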

Caveat: Some editors do not log certain events, so those steps are blocked off.

Percentage of sessions reaching each event, by platform and editor:

| event | desktop visualeditor | desktop wikitext | phone visualeditor | phone wikitext |

|---|---|---|---|---|

| init | 100.0% | 100.0% | 100.0% | 100.0% |

| ready | 92.3% | 42.0% | 95.4% | 97.0% |

| loaded | 92.3% | 41.7% | — | — |

| saveIntent | 13.2% | — | 18.8% | 3.0% |

| saveAttempt | 12.6% | 10.4% | 17.1% | 2.7% |

| saveSuccess | 11.6% | 10.2% | 15.1% | 2.5% |
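The per-editor funnel can be related back to the edit completion rate defined earlier (saved sessions as a share of sessions where the editor became usable). The sketch below is a rough cross-check only: it assumes that "ready" is the right denominator for every editor, and the helper name `completion_rate` is hypothetical.

```python
# Percentage of sessions reaching each event, copied from the table above.
funnel = {
    ("desktop", "visualeditor"): {"ready": 92.3, "saveSuccess": 11.6},
    ("desktop", "wikitext"): {"ready": 42.0, "saveSuccess": 10.2},
    ("phone", "visualeditor"): {"ready": 95.4, "saveSuccess": 15.1},
    ("phone", "wikitext"): {"ready": 97.0, "saveSuccess": 2.5},
}


def completion_rate(stages):
    """Saved sessions as a percentage of sessions that reached 'ready'."""
    return round(100.0 * stages["saveSuccess"] / stages["ready"], 1)


rates = {key: completion_rate(stages) for key, stages in funnel.items()}
for (platform, editor), rate in rates.items():
    print(f"{platform} {editor}: {rate}%")
```

Three of the four results (12.6%, 15.8% and 2.6%) match the edit completion rate table. The desktop wikitext figure comes out at 24.3% rather than 24.6%, which may reflect rounding in the published percentages or a slightly different denominator in the original analysis.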

Time to save

See T202137.

This metric tracks how long it takes for users to complete their edits (in technical terms, how long it takes for completed sessions to get from the init event to the saveSuccess event). Understanding this can help us optimise the experience to make it more efficient for editors.

Caveat: it can be difficult to interpret the meaning of a higher time to save, as it can mean either that users are making larger edits because they're more comfortable with the editor (then higher is better), or it could also mean that usability issues slow down their editing (then higher is worse).

| editor | desktop | mobile |

|---|---|---|

| visual editor | 1m 40s | 1m 43s |

| wikitext editor | 38s | 48s |

| overall | 43s | 50s |

Overall, users take longer to save on either platform when using the visual editor.

The median time to save is similar on mobile and on desktop, but the distribution is tighter on mobile. This suggests that simple edits take longer to make on mobile, and users also tend not to make long, complex edits on mobile.
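A minimal sketch of how such a figure could be computed from session events, assuming each session record carries an init timestamp and, for completed sessions only, a saveSuccess timestamp in seconds. The record layout and function names are illustrative assumptions, not the actual EventLogging schema.

```python
from statistics import median


def time_to_save(session):
    """Seconds from init to saveSuccess, or None if the session never saved."""
    if session.get("saveSuccess") is None:
        return None
    return session["saveSuccess"] - session["init"]


def median_time_to_save(sessions):
    """Median time to save over completed sessions only."""
    durations = [d for d in map(time_to_save, sessions) if d is not None]
    return median(durations) if durations else None


def format_duration(seconds):
    """Format seconds in the style used in the table, e.g. '1m 40s' or '43s'."""
    minutes, secs = divmod(int(round(seconds)), 60)
    return f"{minutes}m {secs}s" if minutes else f"{secs}s"


# Made-up sessions for illustration; the third one was abandoned.
sessions = [
    {"init": 0, "saveSuccess": 38},
    {"init": 10, "saveSuccess": 110},
    {"init": 5, "saveSuccess": None},
    {"init": 20, "saveSuccess": 63},
]

print(format_duration(median_time_to_save(sessions)))
```

Using the median rather than the mean keeps a handful of very long sessions from dominating the figure, which matters when comparing distributions of different widths.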

Use of the visual and wikitext editing interfaces

See T202138.

The edit history data uses edit tags to track which edits are made using the mobile site or the visual editor, but it can be difficult to determine which of the remaining edits were made using the wikitext editor and which were made with the rollback tool or third-party tools using the editing API since all these edits are left untagged.

Since the edit event logging does not run for API edits or rollbacks, we can look at the breakdown of saveSuccess events to get a better sense of the relative use of the two editing interfaces.

Caveat: Some first-party editing tools like undo and some third-party tools still involve loading the wikitext editor even when the user rarely uses it. These tools do generate edit events and so are included as wikitext edits in the breakdown below; whether this is correct or not is open to interpretation.

| Platform | Visual editor percentage | Wikitext editor percentage |

|---|---|---|

| Desktop (visual editor default wikis) | 14.8% | 85.2% |

| Desktop (wikitext editor default wikis) | 5.7% | 94.3% |

| Mobile | 6.1% | 93.9% |

Edit history metrics

The metrics in this section are calculated from edit history preserved by MediaWiki. The dataset included edits from the past year (October 2017 to September 2018), so unlike the EventLogging metrics above, they are not likely to be impacted by one-time events.

They also share some caveats:

- As with the EventLogging metrics, an edit's platform (mobile or desktop) was identified based on whether it was made with the desktop website or MobileFrontend. Since a user can choose to switch to the desktop website on a mobile device or vice versa, the desktop platform includes some edits made on mobile phones or vice versa.

- Because grouping edits made by a single unregistered (IP) editor is unreliable, these metrics look at registered editors only.

Per-interface retention rates

See T202146.

Analyzing how likely a user is to continue using a given editing interface can help us to understand how appealing or useful it is and to better target experiences to increase those qualities.

The following analysis calculates per-interface retention by looking at a six-month period and taking each user's first edit in each distinct interface during the study period as the starting point. Then, it looks at whether the user made more edits using the same interface in each of the first 26 weeks after that starting point; that is, whether each user continued to use that specific environment in each of the subsequent weeks, regardless of edits made by that user through other editing environments.

| Editor | Second week retention (week 2) | Second month retention (weeks 5-8) | Sixth month retention (weeks 23-26) |

|---|---|---|---|

| desktop visual editor | 8.6% | 11.9% | 6.4% |

| desktop wikitext editor | 12.4% | 16.7% | 11.6% |

| mobile visual editor | 4.4% | 6.0% | 2.8% |

| mobile wikitext editor | 5.9% | 8.7% | 5.5% |

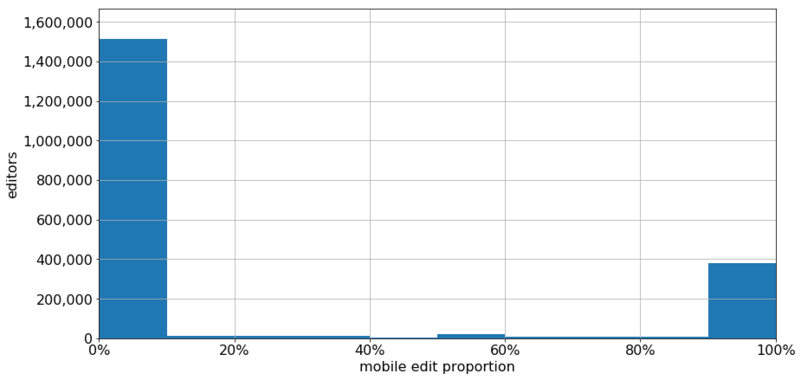
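The per-interface retention calculation described above can be sketched as follows. This is an illustration under stated assumptions, not the team's actual query: edits are given as (user, interface, timestamp) tuples, week 1 is taken to be the seven days starting at the user's first edit in that interface, and the function name `retention` is hypothetical.

```python
from datetime import datetime, timedelta


def retention(edits, first_week, last_week):
    """Fraction of (user, interface) pairs with an edit in the given weeks.

    edits: iterable of (user, interface, datetime) tuples.
    Weeks are counted from each user's first edit in that interface,
    with week 1 covering days 0-6 (an assumption of this sketch).
    """
    edits = list(edits)

    # Starting point: first edit per user in each distinct interface.
    first = {}
    for user, interface, ts in edits:
        key = (user, interface)
        if key not in first or ts < first[key]:
            first[key] = ts

    # A pair is retained if any later edit in the same interface
    # falls inside the requested week range.
    retained = set()
    for user, interface, ts in edits:
        key = (user, interface)
        week = (ts - first[key]).days // 7 + 1
        if first_week <= week <= last_week:
            retained.add(key)

    totals, kept = {}, {}
    for _, interface in first:
        totals[interface] = totals.get(interface, 0) + 1
    for _, interface in retained:
        kept[interface] = kept.get(interface, 0) + 1
    return {i: kept.get(i, 0) / n for i, n in totals.items()}


# Made-up edits for illustration.
start = datetime(2018, 1, 1)
edits = [
    ("A", "mobile visual editor", start),
    ("A", "mobile visual editor", start + timedelta(days=8)),   # week 2
    ("A", "mobile wikitext editor", start + timedelta(days=2)),
    ("B", "mobile visual editor", start),
    ("B", "mobile visual editor", start + timedelta(days=30)),  # week 5
]

second_week = retention(edits, 2, 2)
second_month = retention(edits, 5, 8)
print(second_week, second_month)
```

Because the starting point is tracked per interface, user A's wikitext edit does not count towards visual editor retention, matching the "regardless of edits made through other editing environments" rule.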

Per-user platform mix

See T202135.

Some users edit only using the desktop site, some edit only using the mobile site, and some do both. Understanding the exact breakdown can help us understand to what extent the mobile editing experience is currently serving as a user's only method of editing.

| Edits | Desktop only | Mixed | Mobile only |

|---|---|---|---|

| overall | 74.9% | 6.2% | 18.9% |

| 1-9 edits | 74.5% | 3.4% | 22.1% |

| 10-99 edits | 78.5% | 15.5% | 6.0% |

| 100-999 edits | 69.6% | 28.6% | 1.9% |

| 1000+ edits | 66.7% | 33.1% | 0.1% |

Overall, 25% of editors make some mobile edits. Among low-experience editors, these mobile editors are primarily mobile only, but among highly-experienced editors almost all mobile editors make some desktop edits as well.
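The classification behind this table can be illustrated with a short sketch. It assumes edits are available as (user, platform) pairs with the platform already labelled "desktop" or "mobile"; the function name `platform_mix` and the sample data are hypothetical.

```python
from collections import defaultdict


def platform_mix(edits):
    """Percentage of users who are desktop only, mixed, or mobile only.

    edits: iterable of (user, platform) pairs, where platform is
    'desktop' or 'mobile'.
    """
    platforms = defaultdict(set)
    for user, platform in edits:
        platforms[user].add(platform)

    counts = {"desktop only": 0, "mixed": 0, "mobile only": 0}
    for used in platforms.values():
        if used == {"desktop"}:
            counts["desktop only"] += 1
        elif used == {"mobile"}:
            counts["mobile only"] += 1
        else:
            counts["mixed"] += 1

    total = len(platforms)
    if total == 0:
        return counts
    return {name: round(100.0 * n / total, 1) for name, n in counts.items()}


# Made-up edits for illustration: four users.
edits = [
    ("A", "desktop"), ("A", "desktop"),
    ("B", "mobile"),
    ("C", "desktop"), ("C", "mobile"),
    ("D", "desktop"),
]

mix = platform_mix(edits)
print(mix)
```

The share of editors who make any mobile edits is then the sum of the mixed and mobile-only percentages, which is how the 25% figure follows from the overall row (6.2% plus 18.9%).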

Explore the end-to-end workflow for current users

The editing process begins when the user taps edit. Upon tapping edit, the user is taken to a modal dialogue that presents them with three options: edit anonymously, log in, or sign up.

Upon choosing one of the actions and completing it, the user is immediately taken to the source editor, which shows them the wikitext for the article. On many wikis, the user's viewport is filled with template syntax for the infobox, so a new user can't immediately understand how what they're seeing now relates to what they were reading.

The visual editor is available on mobile web, but it is not exposed to the user in a clear way. The edit pencil at the top of the viewport allows users to switch to visual editing, and when they do so, they are presented with the mobile web version of the visual editor. This version of the visual editor has stripped-down functionality compared to the desktop version:

- certain things have been disabled, such as image editing

- some editing tasks are only available in a simpler form, e.g. math formula editing

Define pain points in the workflow and larger scale problems outside the workflow

An important part of improving products is understanding where problems and pain points currently exist: once the problems are understood, improvements can be made. One of the traps it is easy to fall into is to assume that the problems you have with the product are the problems that everyone has. Whilst this is a useful first approximation, product teams carry out processes in order to gather data that is more rigorous, more comprehensive, and more reliable.

Process

Visual Editor Expert Review Framework – This framework was developed by the team designer in conjunction with the Audiences Design team. It's based on The 10 Heuristics for User Interface Design, the Wikimedia Foundation values, and design principles.

Methods

- Expert review – UX, UI, accessibility, language and engineering professionals will evaluate the tasks provided against the visual editor expert review framework.

- Usability test (usertesting.com research method, demographics screener, and protocol) – Mobile web contributors will attempt to complete the tasks provided.

Personas

Although we strive to design features for all humans, in the scope of this project we have identified a type of editor that represents the "persona" of users who we believe that we are in the best position to support and delight.

These users have one or more of the following attributes:

- are using the browser on their mobile device to edit

- are using the visual editor within Wikipedia.org to edit

- have interest/motivation to fix or improve existing pages (not create new pages or perform administrative functions)

This focus allows the product team to craft a rubric for prioritizing attention to contributor attributes.

The prioritization is:

- Experienced editors who are experienced on mobile

- Experienced editors who are inexperienced on mobile

- Inexperienced editors who are experienced on mobile

- Inexperienced editors who are inexperienced on mobile

Usability test

Visual Editor on Mobile Web Usability Testing via Usertesting.com:

Of the 6 tasks tested (getting to edit mode, adding and formatting text, saving edits, adding internal links and adding citations), only the first 3 were performed without much difficulty or confusion. Though the participants (who hadn’t previously edited Wikipedia) gave generally positive feedback about the editing process, one participant gave up on the last task in frustration. Not only did participants experience bugs and various feature elements needing improvement, they were sometimes entirely unaware that they didn’t complete a task or completed it incorrectly. Given that the tasks were relatively basic/MVP-level, further design/research iteration is recommended.

Heuristic analysis

In addition to performing a usability study, a study performed by internal (within the Wikimedia Foundation) and industry experts provided insight into the quality of the tool. A dozen experts were chosen in the fields of product, engineering, accessibility, design and RTL scripts. The experts took the usability test and then rated the tool against a values-based rubric that the product designer created based on Jakob Nielsen's 10 Heuristics for User Interface Design.

Overall, the product did not perform well against the criteria in the rubric. Here is a report-card themed summary of that information. Each report card mirrors a value presented in the Wikimedia Mission.

Report Card 1: We Welcome and Cherish Our Differences

| Dimension | Definition | Grade |

|---|---|---|

| Learnability | The structure is simple enough that it could be easily learned | To some degree |

| Language Comprehension | The in-tool terms, references, and instructions are obvious and written using simple, jargon-free language | To some degree |

| Proper Casing * | Case is appropriately used throughout labels, titles, and copy | Yes |

| Visibility of System Status | The system always keeps me informed about what is going on, through appropriate feedback within reasonable time | To some degree |

| Nomenclature | I do not have to wonder whether different words, situations, or actions mean the same thing and all of the words follow platform conventions | Yes |

| Conciseness | System Messages and Labels are succinct and use distinct wording | Yes |

| Accessibility | This product is conforming to WCAG level AA as requirement and goes beyond where technically possible. | No |

* Letter case is only relevant for languages that are written in the Latin, Cyrillic, Greek, and Armenian alphabets.

Report Card 2: We are in this Together

| Dimension | Definition | Grade |

|---|---|---|

| Assistance | There was an obvious way to ask for help | No |

| Documentation | Documentation for using the Visual Editor (aka "help") is clearly written | No |

| Explanatory | There are tool tips and information along the way that serve as guide posts for what I want to do | No |

| Instructional | I'm guided through a process to complete my goal (the edit) | To some degree |

| Recognition rather than recall | I know what to do, in order to accomplish my goal, without needing help | To some degree |

| Error Prevention | I didn't run into any system errors | To some degree |

| Error Recovery | Error messages were expressed in plain language (no codes), precisely indicate the problem, and constructively suggest a solution | No |

Report Card 3: We are Inspired

| Dimension | Definition | Grade |

|---|---|---|

| Frustration | The experience was satisfying and didn't cause me frustration | No |

| Freshness | Visual components feel crisp and contemporary | To some degree |

| User Control and Freedom | When I had to exit a task, I was easily able to do so | To some degree |

| Content first design | It is obvious while consuming content that there is a way to edit it | To some degree |

| Joy | It felt good when I finished an edit | No |

Report Card 4: We engage in Civil Discourse

| Dimension | Definition | Grade |

|---|---|---|

| Assistance | There was an obvious way to ask for help | No |

| Documentation | Documentation for using the Visual Editor (aka "help") is clearly written | No |

| Explanatory | There are tool tips and information along the way that serve as guide posts for what I want to do | To some degree |

| Instructional | I'm guided through a process to complete my goal (the edit) | To some degree |

| Organization | You can easily find the functions that you need, when you need to find them | To some degree |

Make proposals for addressing identified pain points

There are two broad categories of ways to address the problem:

1. Look at the UX issues in the end-to-end workflow and improve (and, possibly, remove) steps. For example, is it correct to have the first step be a full-screen, three-option modal? Do we have evidence that it's bad, or just intuition?

2. Come up with alternative visual-based editing experiences, and expose those to users

Technical research

- What are the technical constraints that make the current VE mobile experience difficult to improve or change?

- What could we do to make that easier?

- What things have been persistent challenges due to those technical constraints?

- Would a different visual-based system have different technical constraints?

Current software constraints

This section includes issues that exist due to the current state of visual editing software and have the potential to impact mobile editing.

Platform

- Mobile Safari does strange viewport scrolling when the keyboard is opened, mostly based around the assumption that you are focussing a short input field. MobileFrontend has some hacks to work around these, but they may need more tweaking, especially to work with the OOUI dialog system

- Current Action: improve viewport scrolling (Phabricator ticket to be added shortly)

- Mobile OSes often unload pages from memory if you switch tab or application, especially iOS. This would result in the editor being reloaded when you return to that tab. Fortunately with auto-save this is somewhat less catastrophic, but there may be more work to remember other user state (e.g. if the user was in the middle of creating a citation)

- Screen space constraints affect certain editing tasks, e.g. gallery editing: currently thumbnails and editing toolbars are squashed onto a small screen; difficult to solve without rethinking the workflow

- Current Action: improve consistency of mobile dialogs (T202575)

Code Repositories

- The existing mobile wikitext editor code is spread between two repositories (Minerva and MobileFrontend), which makes making and reviewing changes more difficult. Some code specific to mobile visual editor is in yet another repo, VisualEditor. Streamlining the repositories will improve performance for all users

- Current Action: move mobile editor code (T198765)

Performance

- Mobile web is much slower than web apps, so we may need to anticipate actions not happening immediately and guard against it in the UI (e.g. prevent buttons from being clicked twice)

- Current Action: current user testing will determine whether this is a concern we need to address

Input and language support

Types of input method can vary significantly

- Input via hardware keyboard / software keyboard / full-screen handwriting / voice / ...

- Candidates inserted as single code units / grapheme clusters / words / phrases

- Different paradigms for text correction

Current Action: see VisualEditor mobile IME analysis for more information on research to better understand these constraints
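To make the distinction between code units and grapheme clusters concrete, the sketch below counts the same short strings three ways. It is only an approximation for illustration: the cluster function simply attaches combining marks to the preceding character and does not implement the full Unicode text segmentation rules, and all function names are hypothetical.

```python
import unicodedata


def utf16_code_units(text):
    """Number of UTF-16 code units, the unit browsers use for string offsets."""
    return len(text.encode("utf-16-le")) // 2


def code_points(text):
    """Number of Unicode code points (Python strings are indexed by these)."""
    return len(text)


def approx_grapheme_clusters(text):
    """Rough clusters: combining marks (categories Mn, Mc, Me) join the
    preceding character. An approximation, not full Unicode segmentation."""
    clusters = []
    for ch in text:
        if clusters and unicodedata.category(ch).startswith("M"):
            clusters[-1] += ch
        else:
            clusters.append(ch)
    return clusters


samples = {
    "e + combining acute": "e\u0301",
    "Devanagari ka + vowel sign i": "\u0915\u093f",
    "emoji outside the BMP": "\U0001F600",
}

for label, text in samples.items():
    print(
        label,
        utf16_code_units(text),
        code_points(text),
        len(approx_grapheme_clusters(text)),
    )
```

In each sample the user perceives one character, yet an input method may deliver it as one or two code units or code points, which is why an editor has to cope with candidates arriving at different granularities.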

Mobile development limitations

These limitations are concerns we must bear in mind as we make design and product decisions.

Platform-specific limitations

- Mobile Safari (iOS) does not track the visible viewport size, which makes it extremely challenging to attach toolbars to the bottom of the viewport (which the Google Docs App does, for example)

- Some browsers don't allow reading from or writing to the clipboard programmatically, so we can't make our own copy/paste buttons in the UI

- Safari's WebKit engine in general is considered much buggier than Chrome's Blink

- We have less control over text input than in apps. For example in Mobile Safari a context menu with [B/I/U/Copy/Paste/...] is shown on text selections, which we can't suppress

Performance

- Devices in the wide variety of targeted markets are extremely variable in both form factor and performance. We will need to account for that variability in planning

- Bandwidth, latency and intermittent connections will vary greatly across target audiences. Variability in performance means that certain intensive processes (e.g. visual diffs of long documents) will have particularly variable user experience

Target wikis

To measure and quantify the impact of the interventions that are being made, the team has identified a set of target wikis where we will focus our efforts.

The candidates are Wikipedias with over 200 monthly editors, sorted by the percentage of editors who are majority-mobile. From this list, some larger wikis have been removed in order to target medium-sized wikis that are likely to benefit from the changes we hope to make. The list of target wikis is below.

| Target | Lang code | Script type | Directionality | Proposed target IMEs |

|---|---|---|---|---|

| Bengali Wikipedia | bn | Indic (Bangla) | LTR | |

| Hindi Wikipedia | hi | Indic (Devanagari) | LTR | |

| Arabic Wikipedia | ar | Arabic | RTL | |

| Persian Wikipedia | fa | Arabic (Persian) | RTL | Android Gboard Persian; Android Gboard Persian Handwriting |

| Indonesian Wikipedia | id | Latin | LTR | |

| Marathi Wikipedia | mr | Indic (Devanagari) | LTR | |

| Malay Wikipedia | ms | Latin | LTR | |

| Malayalam Wikipedia | ml | Indic (Malayalam) | LTR | Android Gboard abc→മലയാളം |

| Thai Wikipedia | th | Thai | LTR | Android Gboard Thai keyboard |

| Azerbaijani Wikipedia | az | Latin | LTR | |

| Albanian Wikipedia | sq | Latin | LTR | |

| Hebrew Wikipedia | he | Hebrew | RTL | Android Gboard Hebrew keyboard; Google voice typing עברית (ישראל) |

For our purposes, these can be broken down into 3 groups based on the attributes of their language:

| Group 1: South Asian | Group 2: Right-to-left | Group 3: Latin script |

|---|---|---|

| Bengali | Hebrew | Indonesian |

| Hindi | Persian | Malay |

| Marathi | Arabic | Albanian |

| Malayalam | | Azerbaijani |

Validate thinking and stimulate discussion with both senior management and the communities

We are asking for thoughts and reactions on these pain points from as many communities as possible:

- Have you experienced similar frustrations with the visual editor on the mobile web?

- What do you think works well with the visual editor on the mobile web?

- Did we miss anything?

Please join the conversation on the talk page.

Next steps

- Remote team brainstorms

  - The Editing team will split into small groups of around four people (breakout groups) to brainstorm and discuss items and problems that were raised in the heuristic and usability studies.

- Team shareout 1

  - The breakout groups will share their brainstorming with the other breakout groups.

- Design intervention planning

  - The product manager and designer will create some proposals for how the problems can be addressed.

- Feasibility audit

  - The proposals will be audited for their feasibility from multiple perspectives, including technical difficulty, design complexity, social blockers, and more.

- Team shareout 2

  - The team will share the findings of the audits and discuss further.

- Call for community and management input

  - A call for input will be circulated so that members of our communities and Wikimedia Foundation management can give input on the proposals and thoughts on the next steps. (Feedback is, of course, welcome at any stage in this process, as well as in this specific step.)

- Triage Phabricator to reflect current thinking