Content translation/Technical Architecture

Abbreviations and glossary

- CX - Content Translation

- MT - Machine Translation

- TM - Translation memory

- Segment - Smallest unit of text which is fairly self-contained grammatically. This usually means a sentence, a title, a phrase in a bulleted list, etc.

- Segmentation algorithm - rules to split a paragraph into segments. Weakly language-dependent (sensible default rules work quite well for many languages).

- Parallel bilingual text - two versions of the same content, each written in a different language.

- Sentence alignment - matching corresponding sentences in parallel bilingual text. In general this is a many-to-many mapping, but it is approximately one-to-one if the texts are fairly strict translations.

- Word alignment - matching corresponding words in parallel bilingual text. This is strongly many-to-many.

- Lemmatization - often loosely called stemming. Mapping multiple grammatical variants of the same word to a root form; e.g. (swim, swims, swimming, swam, swum) -> swim. Derivational variants are not usually mapped to the same form (so happiness is not mapped to happy).

- Morphological analysis - mapping words into morphemes, e.g. swims -> swim/3rdperson_present

- Service providers - External systems that provide MT/TM/Glossary services. Example: Google

- Translation tools, translation support tools, translation aids, translation support - interchangeable terms for context-aware translation tools such as MT, dictionary and link localization

- Link localization - Converting a wiki article link from one language to another with the help of Wikidata. Example: http://en.wikipedia.org/wiki/Sea becomes http://es.wikipedia.org/wiki/Mar

Introduction

This document tries to capture the architectural understanding of the Content Translation project. It will evolve as the team goes deeper into each component.

Architecture considerations

- The translation aids that we want to provide come mainly from third-party service providers, or are otherwise external to the CX software itself.

- Depending on the capabilities of the external system, varying delays can be expected. This emphasises the need for server-side optimization of calls to service provider APIs, such as:

  - caching of their results

  - proper scheduling, to make better use of capacity and to stay within API usage limits

- We are providing a free-to-edit interface, so we should not block any edits (that is, translation, in the context of CX) if the user decides not to use translation aids. Support for translation aids can be prepared incrementally and offered in context.
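As a minimal sketch of the two server-side optimizations listed above, the Python below wraps a provider call with a time-limited result cache and a token-bucket rate limiter. All names (`TTLCache`, `TokenBucket`, `cached_call`) and parameters are hypothetical, and Python is used only for illustration; the CX server itself is Node.js.

```python
import time


class TTLCache:
    """Cache provider responses for `ttl` seconds (hypothetical helper)."""

    def __init__(self, ttl, clock=time.monotonic):
        self.ttl = ttl
        self.clock = clock
        self._data = {}

    def get(self, key):
        entry = self._data.get(key)
        if entry is None:
            return None
        value, expires = entry
        if self.clock() >= expires:
            del self._data[key]  # stale entry: drop it and report a miss
            return None
        return value

    def put(self, key, value):
        self._data[key] = (value, self.clock() + self.ttl)


class TokenBucket:
    """Allow `rate` calls per second on average, with bursts up to `capacity`."""

    def __init__(self, rate, capacity, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.tokens = float(capacity)
        self.clock = clock
        self.last = clock()

    def try_acquire(self):
        now = self.clock()
        # Refill in proportion to the time elapsed since the last call.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


def cached_call(provider, cache, bucket, key):
    """Serve from cache if possible; otherwise call the provider if the API limit allows."""
    value = cache.get(key)
    if value is not None:
        return value
    if not bucket.try_acquire():
        return None  # over the usage limit: skip instead of blocking the editor
    value = provider(key)
    cache.put(key, value)
    return value
```

Returning `None` when the bucket is empty, rather than waiting, fits the requirement that editing is never blocked by translation aids; a real scheduler might queue the request and deliver the result later instead.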

Server communication

- We can provide translation aids for the content incrementally, as and when we receive them from service providers. We do not need to wait for all service providers to return data before proceeding.

- For large articles, we will need some kind of paging mechanism to deliver this data in batches.

- This means that the client and server should communicate at regular intervals, or whenever data is available on the server. We considered traditional HTTP pull methods (Ajax) and push communication (WebSockets).
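A minimal sketch of incremental delivery, assuming each service provider is exposed as an async function that takes a batch of segments: `asyncio.as_completed` yields provider results in the order they finish, and `batches` implements the paging for large articles. The provider stubs, their delays and all function names are hypothetical; whether each result is then pushed over a WebSocket or held for the next Ajax poll is left open, as in the text.

```python
import asyncio


def batches(segments, size):
    """Split an article's segments into pages of at most `size` segments."""
    return [segments[i:i + size] for i in range(0, len(segments), size)]


async def _tagged(name, fetch, batch):
    # Remember which provider produced a result, since as_completed() does not.
    return name, await fetch(batch)


async def stream_aids(batch, providers):
    """Yield (provider_name, result) pairs in completion order, so a fast
    provider's data can be sent on without waiting for a slow one."""
    pending = [_tagged(name, fetch, batch) for name, fetch in providers.items()]
    for next_done in asyncio.as_completed(pending):
        yield await next_done


# Hypothetical stand-ins for real service providers.
async def fake_mt(batch):
    await asyncio.sleep(0.01)
    return [f"MT({s})" for s in batch]


async def fake_dictionary(batch):
    await asyncio.sleep(0.1)
    return {s: [] for s in batch}


async def main():
    providers = {"dictionary": fake_dictionary, "mt": fake_mt}
    arrivals = []
    for page in batches(["s1", "s2", "s3"], 2):
        async for name, result in stream_aids(page, providers):
            # The server would push `result` to the client here, or keep it for the next poll.
            arrivals.append(name)
    return arrivals


if __name__ == "__main__":
    print(asyncio.run(main()))
```

Because the dictionary stub is slower, MT results for each page arrive first even though the dictionary is listed first.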

Technology considerations

Client side

- jQuery

- contenteditable, with an optional rich text editor

- LESS, Grid

Server side

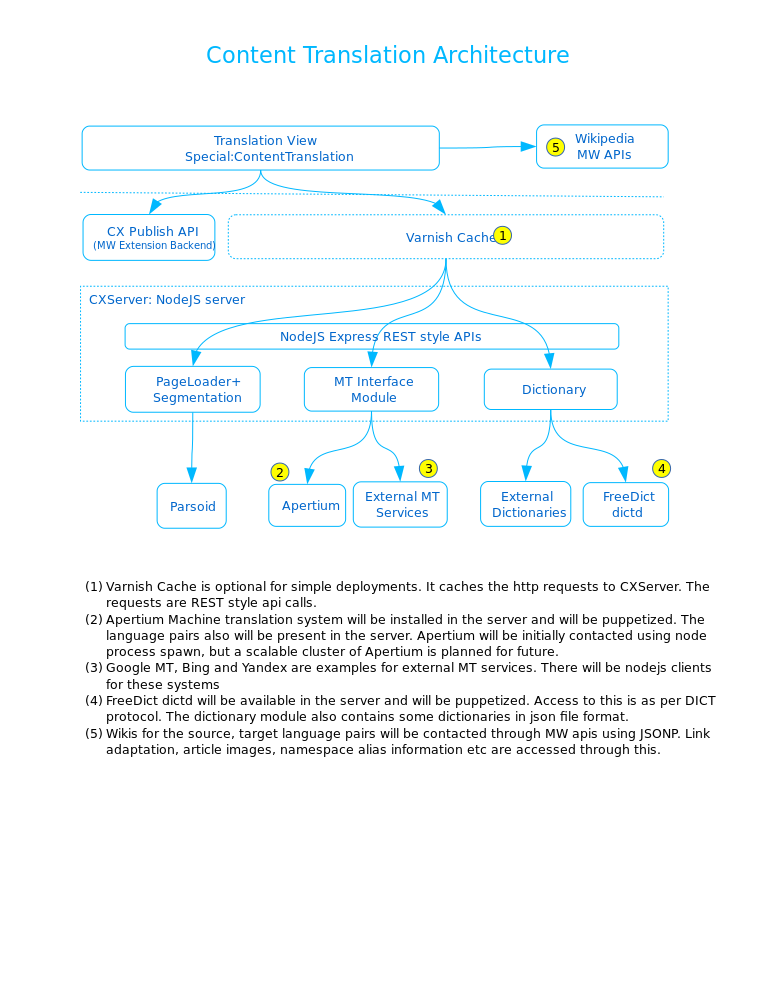

- Node.js with Express

- Varnish Cache

Architecture diagram

Scalability

Node instances

Instances are managed by a system based on the Node.js built-in cluster module; the code is currently borrowed from Parsoid. A master process forks Express server instances according to the number of processors available on the system. It also forks new processes to replace instances that are killed or exit on their own.

A better alternative is the cluster node module from the socket.io developers: http://learnboost.github.io/cluster/
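The fork-and-replace pattern described above can be illustrated with a small Python supervisor built on `subprocess`. It is only an analogy, not the Node.js `cluster` API: it does not share a listening socket between workers the way `cluster` does, and `supervise`, `python_worker` and the respawn limit are hypothetical, with the limit added only so the sketch terminates.

```python
import os
import subprocess
import sys
import time


def python_worker(code):
    """Command line for a worker that runs a Python snippet (for demonstration)."""
    return [sys.executable, "-c", code]


def supervise(worker_cmd, num_workers=None, max_respawns=10, poll_interval=0.05):
    """Keep `num_workers` copies of `worker_cmd` running, one per CPU by default,
    replacing any that exit. Stops after `max_respawns` replacements and returns
    the total number of processes started."""
    num_workers = num_workers or os.cpu_count() or 1
    workers = [subprocess.Popen(worker_cmd) for _ in range(num_workers)]
    started = num_workers
    respawns = 0
    while respawns < max_respawns:
        time.sleep(poll_interval)
        for i, proc in enumerate(workers):
            if proc.poll() is not None and respawns < max_respawns:
                # The worker was killed or exited on its own: replace it.
                workers[i] = subprocess.Popen(worker_cmd)
                started += 1
                respawns += 1
    # Shut down whatever is still running and reap every child.
    for proc in workers:
        if proc.poll() is None:
            proc.terminate()
        proc.wait()
    return started
```

For example, `supervise(python_worker("pass"), num_workers=2, max_respawns=3)` starts two short-lived workers, replaces them three times as they exit, and returns 5. A production supervisor would also back off when workers crash repeatedly instead of respawning in a tight loop.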

Security

To be designed.

WMF Infrastructure

To be designed. https://wikitech.wikimedia.org/wiki/Parsoid gives some idea of what is involved.

The content itself might be sensitive (private wikis), so it should not always be shared through translation memory. We also need to restrict access to service providers, especially paid ones.

Segmentation

See Content_translation/Segmentation
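Segmentation is documented separately, but the glossary's point that sensible default rules work for many languages can be illustrated with a minimal rule-based splitter. The regular expression and the abbreviation list are assumptions for illustration, and this default rule only recognises Latin-script capitals and digits as sentence starts, so other scripts would need their own rules.

```python
import re

# Candidate boundary: sentence-final punctuation, optional closing quotes or
# brackets, whitespace, then an uppercase Latin letter or a digit.
BOUNDARY = re.compile(r'([.!?]["\')\]]*)\s+(?=[A-Z0-9])')

# Hypothetical, deliberately short exception list.
ABBREVIATIONS = {"e.g.", "i.e.", "Dr.", "Mr.", "Mrs.", "etc.", "vs."}


def segment(paragraph, abbreviations=ABBREVIATIONS):
    """Split a paragraph into sentence-like segments using default rules."""
    segments, start = [], 0
    for m in BOUNDARY.finditer(paragraph):
        candidate = paragraph[start:m.end(1)]
        if candidate.split()[-1] in abbreviations:
            continue  # e.g. "Dr. Smith" is not a sentence boundary
        segments.append(candidate.strip())
        start = m.end()
    rest = paragraph[start:].strip()
    if rest:
        segments.append(rest)
    return segments
```

For instance, `segment("Dr. Smith swims daily. He likes the sea!")` keeps "Dr. Smith swims daily." together as one segment.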

Workflow

See Content_translation/Workflow

Saving the work

See Publishing

Machine learning

We need to capture translators' improvements to the translation suggestions (for all translation aids). If we allow free-text translation, the extent to which we can do this is limited, as explained in the segment alignment section of this document. But for the draft and final versions of a translation, we need to capture the following in the CX system, to improve it continuously and potentially to provide this data to service providers:

- Dictionary corrections/improvements - whether the user used a CX suggestion or a new meaning and, out of n suggestions, which one was used.

- Link localization - TBD: can we give this back to Wikidata?

- MT - edited translations

- Translation memory - whether suggestions are used, which affects their confidence score

- Segmentation corrections

- TBD: whether we do this automatically, when the user saves, or when the user publishes

- TBD: how exactly we analyse the translation to create semantic information for feeding the systems mentioned above

One potential outcome of this is parallel corpora for many language pairs, which are quite useful for any kind of morphological analysis.
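As a hypothetical sketch of what could be captured, the records below store which dictionary suggestion a translator picked (or `None` for a new meaning) and how heavily an MT suggestion was edited. `difflib.SequenceMatcher` is used as a stand-in similarity measure; the class and field names are illustrative, not part of CX.

```python
from dataclasses import dataclass
from difflib import SequenceMatcher
from typing import List, Optional


@dataclass
class DictionaryFeedback:
    word: str
    suggestions: List[str]  # meanings offered by CX, in display order
    used: str               # meaning the translator actually used

    @property
    def chosen_index(self) -> Optional[int]:
        """Index of the suggestion used, or None if the translator chose a new meaning."""
        return self.suggestions.index(self.used) if self.used in self.suggestions else None


@dataclass
class MTFeedback:
    segment_id: str
    mt_output: str   # text as suggested by the MT provider
    final_text: str  # text as saved by the translator

    @property
    def edit_ratio(self) -> float:
        """0.0 if the MT output was kept verbatim, up to 1.0 if it was fully rewritten."""
        return 1.0 - SequenceMatcher(None, self.mt_output, self.final_text).ratio()
```

An `edit_ratio` near 0 suggests the MT output was usable as-is, which is the kind of signal that could feed back into provider ranking.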

User interface

Segment alignment

We allow free-text editing for translation. We can offer word meanings and translation templates. But since we allow users to summarize, combine multiple sentences, or choose not to translate something, we will not be able to learn the translation.

We need to align the source and target texts; for example: translatedSentence24 is the translation of originalSentence58.

This context is completely different from the structured translation we do with the Translate extension. The following types of edits are possible:

- Translator combines multiple segments into a single segment, as in summarising

- Translator splits a single segment into multiple segments to construct simpler sentences

- Translator leaves multiple segments untranslated

- Translator re-orders segments within a paragraph or even across multiple paragraphs

- Translator paraphrases content from multiple segments into another set of segments

TODO: replace the word alignment diagram opposite with a suitable segment alignment diagram

The CX UI provides visual synchronization of segments wherever possible. From the UI perspective, word and sentence alignment help user orientation, but they are not a critical component: the experience does not break if alignment is not 100% accurate.

Alignment of words and sentences is useful for giving the user additional context. When getting information on a word in the translation, it is helpful to have it highlighted in the source, so that it is easier to see the context in which the word is used. This is not illustrated in the prototype, but it is something Google Translate does, for example.

Pau thinks it is fine if alignment does not work in all cases. For example, we may want to support it when the translation is done by modifying the initial template, but it is harder if the user starts from scratch, so we don't do it in the latter case.

The following best-effort approach is proposed:

- We are considering a source article annotated with segment identifiers. When we use machine translation to produce the template, we can try copying these annotations to the template. The feasibility of this depends on several things: (a) the ability of MT providers to take HTML and return the translation without losing these annotations; (b) mapping the link ids on our server side, using href as the key; (c) if no MT backend is available, we give the source article as the translation template, so there is no issue there.

- In the translation template, user edits may destroy the segment markup. This can happen when two sentences are combined, or even just when the first word is deleted. But wherever the annotations remain, it should be easy for us to do the visual sync-up.

- If not enough annotations (ids) match, we do not attempt alignment.
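The best-effort matching of surviving annotations can be sketched as follows, assuming segments carry their identifier in a `data-segmentid` attribute (an assumed attribute name) and using Python's standard `html.parser`. The 50% threshold for "enough" matching ids is an arbitrary placeholder, not a figure from this design, and the function names are hypothetical.

```python
from html.parser import HTMLParser


class SegmentIdCollector(HTMLParser):
    """Collect values of the (assumed) data-segmentid attribute, in document order."""

    def __init__(self):
        super().__init__()
        self.ids = []

    def handle_starttag(self, tag, attrs):
        for name, value in attrs:
            if name == "data-segmentid" and value is not None:
                self.ids.append(value)


def segment_ids(html):
    collector = SegmentIdCollector()
    collector.feed(html)
    collector.close()
    return collector.ids


def align_by_ids(source_html, target_html, min_ratio=0.5):
    """Return the ids present in both columns, or an empty set if too few
    source annotations survived in the translation (min_ratio is hypothetical)."""
    source = set(segment_ids(source_html))
    if not source:
        return set()
    surviving = source & set(segment_ids(target_html))
    if len(surviving) / len(source) < min_ratio:
        return set()  # not enough matching annotations: skip the visual sync-up
    return surviving
```

Order is deliberately ignored, so re-ordered segments still match by id; only destroyed markup reduces the overlap.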

Over iterations, this approach can be improved in multiple ways:

- Consider incorporating sentence alignment using minimal linguistic data for simple language pairs, if possible. Example: English-Welsh (?)

- Consider preserving contenteditable nodes in a translation template, so that we have segment ids to match (following the VE team's developments in this area)
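For the first improvement above, a simplified length-based aligner in the spirit of Gale and Church needs no linguistic data beyond sentence lengths. This sketch swaps their probabilistic cost for a plain relative length difference plus fixed penalties; the penalty values, the weight and the function name are arbitrary assumptions.

```python
def align_by_length(src, tgt, skip_penalty=10.0, merge_penalty=2.0, length_weight=10.0):
    """Align two lists of sentences using only character lengths.

    Returns a list of (source_indices, target_indices) beads, covering
    1-1, 1-0, 0-1, 2-1 and 1-2 correspondences.
    """
    # Bead shapes: (source sentences consumed, target sentences consumed, penalty).
    moves = [(1, 1, 0.0), (1, 0, skip_penalty), (0, 1, skip_penalty),
             (2, 1, merge_penalty), (1, 2, merge_penalty)]
    n, m = len(src), len(tgt)
    inf = float("inf")
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    back = [[None] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    # Row-major order guarantees each cell is final before it is expanded.
    for i in range(n + 1):
        for j in range(m + 1):
            if cost[i][j] == inf:
                continue
            for di, dj, penalty in moves:
                ni, nj = i + di, j + dj
                if ni > n or nj > m:
                    continue
                ls = sum(len(s) for s in src[i:ni])
                lt = sum(len(t) for t in tgt[j:nj])
                # Relative length mismatch: 0 when lengths are equal, 1 when one side is empty.
                mismatch = abs(ls - lt) / max(ls + lt, 1)
                c = cost[i][j] + length_weight * mismatch + penalty
                if c < cost[ni][nj]:
                    cost[ni][nj] = c
                    back[ni][nj] = (i, j)
    # Walk back from the end to recover the cheapest sequence of beads.
    beads = []
    i, j = n, m
    while (i, j) != (0, 0):
        pi, pj = back[i][j]
        beads.append((list(range(pi, i)), list(range(pj, j))))
        i, j = pi, pj
    beads.reverse()
    return beads
```

Because only lengths are used, this works best for language pairs whose sentence lengths correlate closely; whether English-Welsh qualifies would need to be checked.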

An approach to be evaluated: instead of making the whole translation column contenteditable, mark each segment as contenteditable when a translation template is inserted. This would prevent segments from being destroyed.
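A sketch of that alternative: the template is generated so that every segment is its own contenteditable element carrying its id. The `cx-segment` class, the `data-segmentid` attribute and the function name are assumptions for illustration; the input is a list of (segment id, translated text) pairs.

```python
from html import escape


def translation_template(segments):
    """Build translation-column HTML in which each segment is a separate editing host.

    segments: list of (segment_id, translated_text) pairs (hypothetical input format).
    """
    parts = []
    for seg_id, text in segments:
        parts.append(
            f'<span class="cx-segment" data-segmentid="{escape(seg_id)}" '
            f'contenteditable="true">{escape(text)}</span>'
        )
    return "<p>" + " ".join(parts) + "</p>"
```

The likely trade-off is that a translator can no longer merge two segments simply by deleting the text between them, since each segment is a separate editing host; that same restriction is what keeps the ids intact.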

Caching

See Caching

Machine Translation

Dictionary

- Google dictionary: https://code.google.com/p/google-ajax-apis/issues/detail?id=16

- There is one dictionary data object for the whole article.

- Listing entries per segment would be extremely repetitious.

- Morphology: the dictionary data contains entries in citation form, but matched text may contain inflected forms (for example, “chemicals” should match the entry “chemical”).

- What we need is a pluggable architecture that can be used for “practical”, “minimal” stemmers.

- A stemmer that produces false positives is acceptable, because it is acceptable to suggest an incorrect meaning. It is also acceptable not to give a meaning for every word.
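A minimal sketch of such a pluggable architecture: stemmers are registered per language code and return candidate citation forms, and the lookup tries each candidate against the article's dictionary data. The registry, the naive English suffix rules and all names are hypothetical; the rules deliberately over-generate, in line with the tolerance for false positives noted above.

```python
from typing import Callable, Dict, List, Optional

Stemmer = Callable[[str], List[str]]
_STEMMERS: Dict[str, Stemmer] = {}


def register_stemmer(lang: str):
    """Decorator that plugs a stemmer in for one language code."""
    def decorator(fn: Stemmer) -> Stemmer:
        _STEMMERS[lang] = fn
        return fn
    return decorator


@register_stemmer("en")
def minimal_english_stemmer(word: str) -> List[str]:
    """Return the word plus candidate citation forms; over-generation is acceptable."""
    word = word.lower()
    candidates = [word]
    for suffix, replacement in (("ies", "y"), ("es", ""), ("s", ""), ("ing", ""), ("ed", "")):
        if word.endswith(suffix) and len(word) > len(suffix) + 2:
            candidates.append(word[: -len(suffix)] + replacement)
    return candidates


def lookup(word: str, lang: str, dictionary: Dict[str, str]) -> Optional[str]:
    """Find a dictionary entry for `word`; languages without a stemmer use exact match."""
    stem = _STEMMERS.get(lang, lambda w: [w.lower()])
    for candidate in stem(word):
        if candidate in dictionary:
            return dictionary[candidate]
    return None  # no meaning offered, which is acceptable
```

Adding a language then means registering one more function, without touching the lookup code.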

Translation Memory

Notes

- TM suggestions are stored per-segment, as there is less repetition and the rankings only make sense per-segment.

- “match” means how closely the TM source segment matches the segment being translated. This score may be weighted by metadata (e.g. an article on Chemistry may give more weight to a source segment with “Chemistry” as a tag).

- “confidence” means how reliable the translation is. This would be a function of many factors, e.g. translation review, article history, and the statistical reliability of the translator.

- The TM suggestion search is timestamped, as it may be computed ahead of time.

- A more efficient “update search” can be done to refresh suggestions, just considering newer records.

- The client-side suggester can also make use of this information.
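To make the notes above concrete, here is a hypothetical sketch of a timestamped TM search with an incremental update that only considers records newer than the previous search. The match score uses `difflib.SequenceMatcher` as a stand-in similarity measure plus a fixed bonus for shared tags (an assumed weighting), and confidence is a precomputed number per record; none of these names come from CX.

```python
from dataclasses import dataclass, field
from difflib import SequenceMatcher
from typing import List, Set, Tuple


@dataclass
class TMRecord:
    source: str
    target: str
    tags: Set[str]
    confidence: float  # 0..1, how reliable the stored translation is
    created: float     # timestamp of the record


@dataclass
class TMSearch:
    segment: str
    tags: Set[str]
    searched_at: float = 0.0
    suggestions: List[Tuple[float, TMRecord]] = field(default_factory=list)


def match_score(segment, record, tags, tag_weight=0.1):
    """How closely the TM source matches the segment, nudged up by shared tags."""
    score = SequenceMatcher(None, segment, record.source).ratio()
    if tags & record.tags:
        score = min(1.0, score + tag_weight)
    return score


def update_search(search, records, now, min_match=0.5, limit=3):
    """Refresh a search, scoring only records created after the last search."""
    for rec in records:
        if rec.created > search.searched_at:
            score = match_score(search.segment, rec, search.tags)
            if score >= min_match:
                search.suggestions.append((score, rec))
    # Rank by match, then by confidence, and keep the best few.
    search.suggestions.sort(key=lambda s: (s[0], s[1].confidence), reverse=True)
    del search.suggestions[limit:]
    search.searched_at = now
    return search
```

Keeping `searched_at` on the search is what allows a precomputed search to be refreshed cheaply instead of rescanning the whole memory.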

Link localization

This should be done with the help of Wikidata, and the results should also be cached.
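One way to do this, sketched under assumptions, is the Wikidata API's `wbgetentities` module: given a title on the source wiki, it returns the item's sitelinks, from which the target-wiki title can be read. The helper names and the User-Agent string are placeholders, and `functools.lru_cache` merely stands in for whatever shared cache CX would actually use.

```python
import json
import urllib.parse
import urllib.request
from functools import lru_cache

WIKIDATA_API = "https://www.wikidata.org/w/api.php"
USER_AGENT = "cx-link-localization-sketch/0.1 (placeholder contact)"  # hypothetical; set a real one


def sitelinks_url(title, source_lang, target_lang):
    """Build a wbgetentities query for the item linked to `title` on the source wiki."""
    params = {
        "action": "wbgetentities",
        "sites": f"{source_lang}wiki",
        "titles": title,
        "props": "sitelinks",
        "sitefilter": f"{target_lang}wiki",
        "format": "json",
    }
    return WIKIDATA_API + "?" + urllib.parse.urlencode(params)


def parse_sitelink(response, target_lang):
    """Return the target-wiki title from a wbgetentities response, or None."""
    for entity in response.get("entities", {}).values():
        link = entity.get("sitelinks", {}).get(f"{target_lang}wiki")
        if link:
            return link["title"]
    return None  # missing item or no article in the target language


@lru_cache(maxsize=10_000)
def localize_link(title, source_lang, target_lang):
    """Network call, memoized in-process as a stand-in for a shared cache."""
    request = urllib.request.Request(
        sitelinks_url(title, source_lang, target_lang),
        headers={"User-Agent": USER_AGENT},
    )
    with urllib.request.urlopen(request, timeout=10) as resp:
        return parse_sitelink(json.load(resp), target_lang)
```

Following the glossary example, `localize_link("Sea", "en", "es")` would be expected to yield the title of the Spanish article, from which http://es.wikipedia.org/wiki/Mar follows. Keeping response parsing separate from the network call makes it testable without network access.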