Selenium/Ruby/Browser testing user satisfaction survey

This page is obsolete. It is being retained for archival purposes. It may document extensions or features that are obsolete and/or no longer supported. Do not rely on the information here being up-to-date. See Selenium instead.

The goal of the survey was to get a baseline of user satisfaction with our browser-testing infrastructure and tooling. The target audience was the members of a few mailing lists: wikitech-l, engineering and qa. The survey was created as a Google Docs form. It had 5 sections, and each section had a few questions. The last question in each section was a free-form text field, so participants could leave any feedback. Free-form answers are summarized, as the survey privacy statement required. Most of the questions used a simple 5-level linear scale, from 1 to 5, or from :'( to :D.

| Emoticon | Score |
|---|---|
| :'( | 1 |
| :( | 2 |
| :\| | 3 |
| :) | 4 |
| :D | 5 |

18 people participated in the survey. Each question received between 8 and 16 answers. All details (including all questions) can be found in Phabricator task T131123.

Jenkins

Take aways:

- Survey participants are fairly happy with the stability and speed of the mwext-mw-selenium jobs.

- Note, however, that some mwext-mw-selenium jobs currently fail with hard-to-reproduce failures, and some jobs take hours to run.

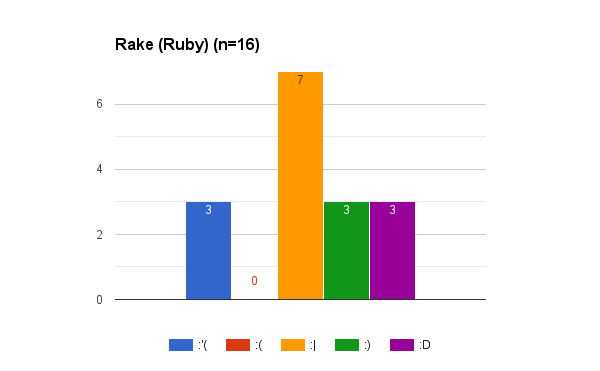

- Most participants also know how to use the continuous integration entry points for Ruby (Rake) and JavaScript (Grunt).

Survey comments (paraphrased):

- The majority of the failures were related to CI, not actual failures of tested code. They are having trouble debugging and do not understand how our continuous integration works.

| Gerrit triggered Selenium+Jenkins jobs (mwext-mw-selenium, after patch set submission) | ||||||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

|

Jobs are stable enough (n=16)

|

||||||||||||||||

|

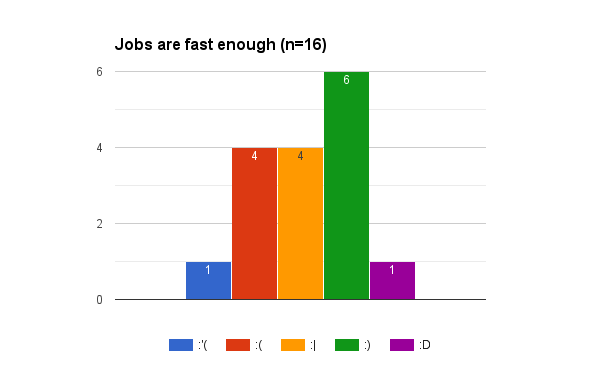

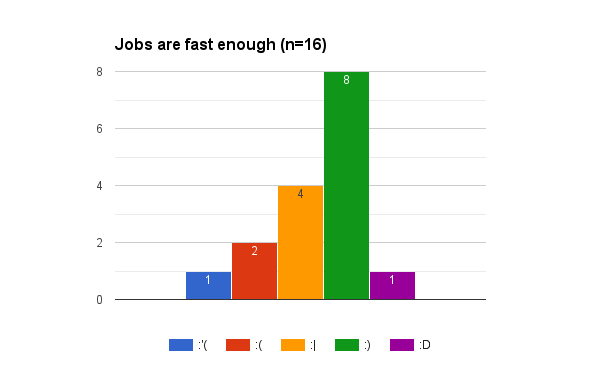

Jobs are fast enough (n=16)

|

||||||||||||||||

| Time triggered Selenium+Jenkins jobs (selenium*, daily) | ||||||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

|

Jobs are stable enough (n=16)

|

||||||||||||||||

|

Jobs are fast enough (n=16)

|

||||||||||||||||

| I know how to use continuous integration entry points | ||||||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

|

Rake (Ruby) (n=16)

|

||||||||||||||||

|

Grunt (JavaScript) (n=16)

|

||||||||||||||||

Ruby

Take aways:

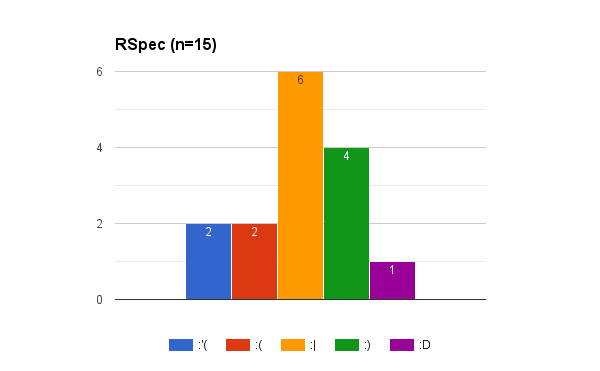

- Interestingly, slightly more participants are happy with the Ruby testing tools (mediawiki_selenium, Cucumber, RSpec) than unhappy with them.

- Most participants did not have strong feelings. We had expected more people to be unhappy with the Ruby testing tools.

Survey comments (paraphrased):

- Ruby is not the optimal choice for testing tools; another language that is more popular with MediaWiki developers would be a better choice. Some libraries we use are poorly documented.

- Ruby is not the best language in our context; Node.js would be a better choice.

- Having fewer languages on the stack would simplify things, but Ruby has good testing tools, so the decision is not easy.

| How do you feel about | Answers (n) |
|---|---|
| mediawiki_selenium | 15 |
| Cucumber | 14 |
| RSpec | 15 |

JavaScript

Take aways:

- The JavaScript testing framework Malu is in its early development, and this survey was probably the first time some of the participants had heard about it. That might explain the high number of :| (or 3) responses about Malu. 11 participants (69%) gave that response, more votes than any answer to any other question received. Participants were in general positive towards Mocha and QUnit.
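The 69% figure can be checked against the 16 answers the Malu question received (n=16): 11 of 16 is 68.75%, which rounds to 69%. A minimal sketch of that arithmetic in Python follows; the function name `share_percent` is hypothetical and not part of any survey tooling.

```python
def share_percent(votes: int, answers: int) -> int:
    """Return the share of `votes` among `answers` as a whole-number percentage."""
    if answers <= 0:
        raise ValueError("answers must be positive")
    return round(100 * votes / answers)


# 11 neutral (":|", or 3) votes out of the 16 answers to the Malu question.
malu_neutral_share = share_percent(11, 16)
print(f"{malu_neutral_share}%")
```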

Survey comments (paraphrased):

- Had not heard of Malu before and did not find much documentation when they went looking. No opinion on Mocha. QUnit does the job well, but has its problems.

- Not familiar with Malu but likes the idea. Prefers Mocha to QUnit but not sure if another testing framework would cause problems.

| How do you feel about | Answers (n) |
|---|---|
| Malu | 16 |
| Mocha | 15 |
| QUnit | 15 |

Selenium

Take aways:

- It is surprising that participants were pretty happy about writing tests.

- It was not a surprise that the feelings were not the same when it came to fixing failed tests.

Survey comments (paraphrased):

- A lot of time is spent fixing tests because the testing environment is not stable and tests are not well written, the latter caused by a lack of understanding of the framework.

| How do you feel about | Answers (n) |
|---|---|
| Writing tests | 16 |
| Fixing failed tests | 15 |

Getting help

Take aways:

- Our documentation (at mediawiki.org) is neither good enough nor up to date (for example, Browser testing), but participants were happier with it than expected.

- It looks like the most helpful tool was IRC (#wikimedia-releng), followed to some degree by Phabricator (#browser-tests, #browser-tests-infrastructure) and mailing lists (qa).

Survey comments (paraphrased):

- Documentation is not great. The getting started documentation targets Mac. The getting started tutorial should be as simple as possible; the rest of the documentation should be moved to other pages.

- Would like to know more about the testing infrastructure, preferably as a session.

- Hard to get help. Better process for getting help needed. Hard to schedule pair programming.

| Rate your experience getting help via the below methods | Answers (n) |
|---|---|
| mediawiki.org | 16 |
| IRC | 16 |
| Phabricator | 16 |
| Mailing list | 16 |

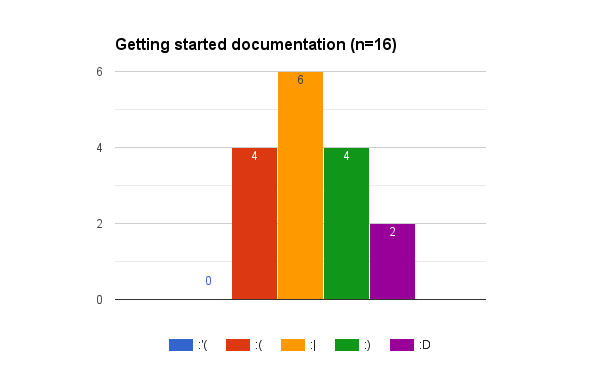

Documentation

Take aways:

- Documentation is important. There is never enough of it, and what is out there gets outdated quickly.

- Both our getting started (example: Setup instructions) and general documentation (example: Browser testing) got more positive than negative answers.

| How do you feel about | Answers (n) |
|---|---|
| Getting started documentation | 16 |
| Documentation on how to do testing | 16 |

I need help

Take aways:

- We offered training in the past, but we were not sure what type of training people preferred.

- Based on the answers, participants like pairing, online workshops and in-person workshops equally.

- That is true for both getting started sessions and advanced sessions (like fixing failed tests).

| I need help | Answers (n) |
|---|---|
| I need help with getting started with testing | 9 |
| I need help with fixing failed tests | 8 |